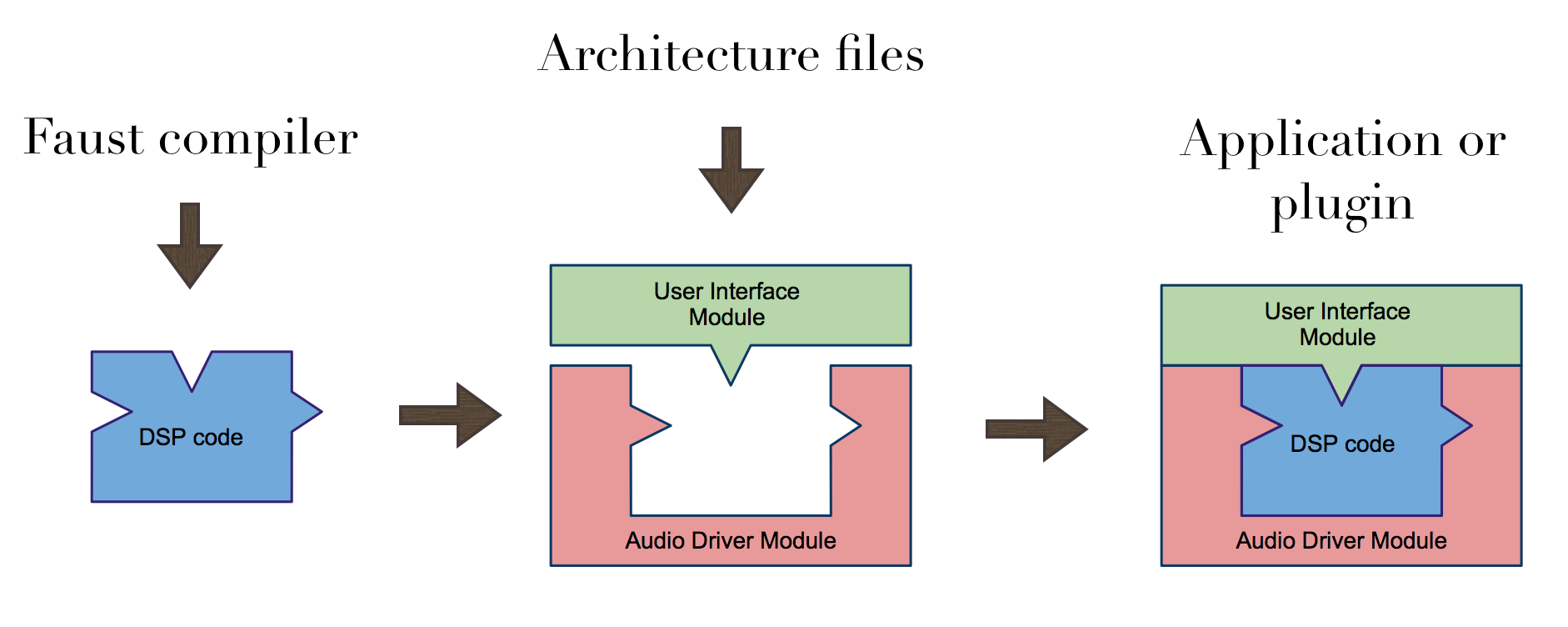

Architecture Files

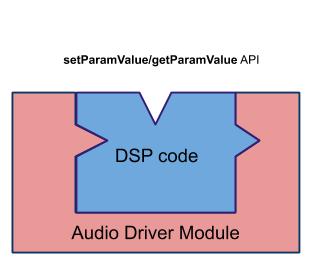

A Faust program describes a signal processor, a pure DSP computation that maps input signals to output signals. It says nothing about audio drivers or controllers (like GUI, OSC, MIDI, sensors) that are going to control the DSP. This additional information is provided by architecture files.

An architecture file describes how to relate a Faust program to the external world, in particular the audio drivers and the controller interfaces to be used. This approach allows a single Faust program to be easily deployed to a large variety of audio standards (e.g., Max/MSP externals, PD externals, VST plugins, CoreAudio applications, JACK applications, iPhone/Android, etc.).

The architecture to be used is specified at compile time with the -a option. For example, faust -a jack-gtk.cpp foo.dsp tells the compiler to use the JACK GTK architecture when compiling foo.dsp.

Some of these architectures are a modular combination of an audio module and one or more controller modules. Some architectures only combine an audio module with the generated DSP to create an audio engine that can be controlled with an additional setParamValue/getParamValue kind of API, so that the controller part can be completely defined externally. This is the purpose of the faust2api script explained later on.

Minimal Structure of an Architecture File

Before going into the details of the architecture files provided with the Faust distribution, it is important to have an idea of the essential parts that compose an architecture file. Technically, an architecture file is any text file with two placeholders <<includeIntrinsic>> and <<includeclass>>. The first placeholder is currently not used, and the second one is replaced by the code generated by the Faust compiler.

Therefore, the really minimal architecture file, let's call it nullarch.cpp, is the following:

<<includeIntrinsic>>

<<includeclass>>

This nullarch.cpp architecture has the property that faust foo.dsp and faust -a nullarch.cpp foo.dsp produce the same result. Obviously, this is not very useful; moreover, the resulting cpp file does not compile.

Here is miniarch.cpp, a minimal architecture file that contains enough information to produce a cpp file that can be successfully compiled:

<<includeIntrinsic>>

#define FAUSTFLOAT float

class dsp {};

struct Meta {
    virtual void declare(const char* key, const char* value) {}
};

struct Soundfile {};

struct UI {
    // -- widget's layouts
    virtual void openTabBox(const char* label) {}
    virtual void openHorizontalBox(const char* label) {}
    virtual void openVerticalBox(const char* label) {}
    virtual void closeBox() {}

    // -- active widgets
    virtual void addButton(const char* label, FAUSTFLOAT* zone) {}
    virtual void addCheckButton(const char* label, FAUSTFLOAT* zone) {}
    virtual void addVerticalSlider(const char* label, FAUSTFLOAT* zone, FAUSTFLOAT init, FAUSTFLOAT min, FAUSTFLOAT max, FAUSTFLOAT step) {}
    virtual void addHorizontalSlider(const char* label, FAUSTFLOAT* zone, FAUSTFLOAT init, FAUSTFLOAT min, FAUSTFLOAT max, FAUSTFLOAT step) {}
    virtual void addNumEntry(const char* label, FAUSTFLOAT* zone, FAUSTFLOAT init, FAUSTFLOAT min, FAUSTFLOAT max, FAUSTFLOAT step) {}

    // -- passive widgets
    virtual void addHorizontalBargraph(const char* label, FAUSTFLOAT* zone, FAUSTFLOAT min, FAUSTFLOAT max) {}
    virtual void addVerticalBargraph(const char* label, FAUSTFLOAT* zone, FAUSTFLOAT min, FAUSTFLOAT max) {}

    // -- soundfiles
    virtual void addSoundfile(const char* label, const char* filename, Soundfile** sf_zone) {}

    // -- metadata declarations
    virtual void declare(FAUSTFLOAT* zone, const char* key, const char* val) {}
};

<<includeclass>>

This architecture is still not very useful, but it gives an idea of what a real-life architecture file has to implement, in addition to the audio part itself. As we will see in the next section, Faust architectures are implemented using a modular approach to avoid code duplication and favor code maintenance and reuse.

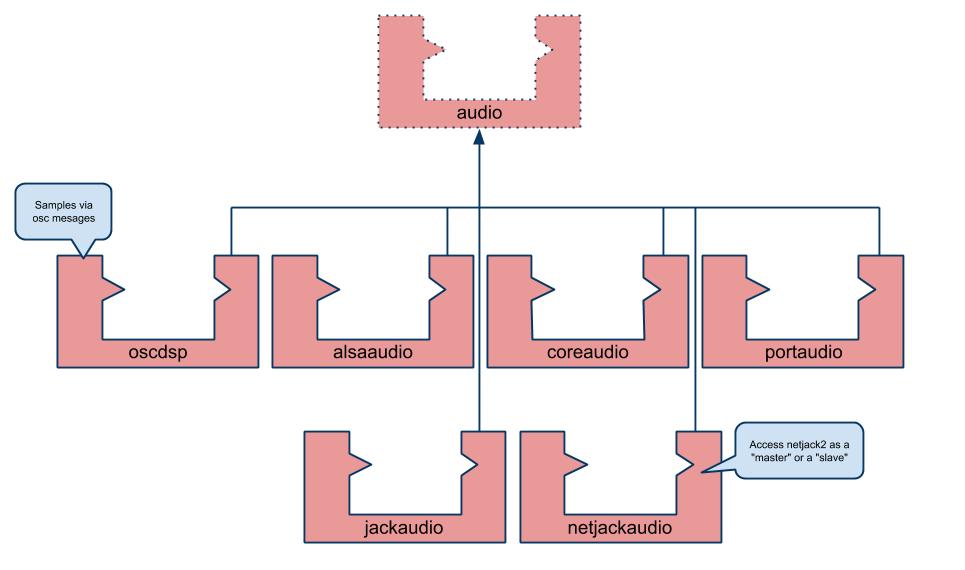

Audio Architecture Modules

A Faust-generated program has to connect to an underlying audio layer. Depending on whether the final program is an application or a plugin, the way to connect to this audio layer will differ:

- applications typically use the OS audio driver API, which will be CoreAudio on macOS, ALSA on Linux, WASAPI on Windows for instance, or any kind of multi-platform API like PortAudio or JACK. In this case a subclass of the base class audio (see later) has to be written
- plugins (like VST3, Audio Unit or JUCE for instance) usually have to follow a more constrained API which imposes a life cycle, something like a loading/initializing/starting/running/stopping/unloading sequence of operations. In this case the Faust-generated module new/init/compute/delete methods have to be inserted in the plugin API, by calling each module function at the appropriate place.

External and internal audio sample formats

Audio samples are managed by the underlying audio layer, typically as 32-bit float or 64-bit double values in the [-1..1] interval. Their format is defined with the FAUSTFLOAT macro, implemented in the architecture file as float by default. The DSP internal sample format is chosen at compile time, with the -single (= default), -double or -quad compilation option. Control parameters like buttons, sliders... also use the FAUSTFLOAT format.

By default, the FAUSTFLOAT macro is written with the following code:

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

which gives it a value (if not already defined), and since the default internal format is float, nothing special has to be done in the general case. But when the DSP is compiled using the -double option, the audio input/output buffers have to be adapted, with a dsp_sample_adapter class, for instance as in the dynamic-jack-gtk tool.

Note that an architecture may redefine FAUSTFLOAT as double, and have the complete audio chain running in double. This has to be done before including any architecture file that would define FAUSTFLOAT itself (because of the #ifndef logic).
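To make the idea of sample adaptation concrete, here is a minimal sketch of what such an adapter does; it is not Faust's actual dsp_sample_adapter. It assumes a hypothetical DoubleDSP interface whose compute method takes double buffers (as a DSP compiled with -double would), and copies and converts the driver's FAUSTFLOAT (float) buffers on each call. The names DoubleDSP and SampleAdapter are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical DSP interface computing on double buffers (as with -double).
class DoubleDSP {
    public:
        virtual ~DoubleDSP() {}
        virtual int getNumInputs() = 0;
        virtual int getNumOutputs() = 0;
        virtual void compute(int count, double** inputs, double** outputs) = 0;
};

// Hypothetical adapter: offers a FAUSTFLOAT compute method and converts
// samples to and from double around the wrapped DSP.
class SampleAdapter {
    private:
        DoubleDSP* fDSP;
        int fMaxFrames;
        std::vector<std::vector<double>> fIn, fOut;  // internal double buffers
        std::vector<double*> fInPtrs, fOutPtrs;      // channel pointers for compute
    public:
        SampleAdapter(DoubleDSP* dsp, int max_frames)
            : fDSP(dsp), fMaxFrames(max_frames),
              fIn(dsp->getNumInputs(), std::vector<double>(max_frames)),
              fOut(dsp->getNumOutputs(), std::vector<double>(max_frames))
        {
            for (auto& ch : fIn) fInPtrs.push_back(ch.data());
            for (auto& ch : fOut) fOutPtrs.push_back(ch.data());
        }

        void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs)
        {
            assert(count <= fMaxFrames);  // buffers are sized once, in the constructor
            for (std::size_t c = 0; c < fIn.size(); c++)
                for (int i = 0; i < count; i++) fIn[c][i] = double(inputs[c][i]);
            fDSP->compute(count, fInPtrs.data(), fOutPtrs.data());
            for (std::size_t c = 0; c < fOut.size(); c++)
                for (int i = 0; i < count; i++) outputs[c][i] = FAUSTFLOAT(fOut[c][i]);
        }
};
```

Allocating the double buffers once, outside the audio callback, avoids memory allocation in the real-time thread; the price is a fixed maximum buffer size.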

Connection to an audio driver API

An audio driver architecture typically connects a Faust program to the audio drivers. It is responsible for:

- allocating and releasing the audio channels and presenting the audio as non-interleaved float/double data (depending on the FAUSTFLOAT macro definition), normalized between -1.0 and 1.0
- calling the DSP init method at init time, to set up the ma.SR variable possibly used in the DSP code
- calling the DSP compute method to handle incoming audio buffers and/or to produce audio outputs.
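Many native audio APIs deliver interleaved buffers (L R L R ...), while the DSP compute method expects one non-interleaved array per channel. The following sketch shows the conversion a driver might perform around each compute call; the deinterleave/interleave helpers are hypothetical and assume 32-bit float interleaved data on the driver side.

```cpp
#include <cassert>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Split an interleaved buffer (frame by frame, channel by channel) into
// one FAUSTFLOAT array per channel, as expected by compute.
void deinterleave(const float* interleaved, int frames, int channels, FAUSTFLOAT** out)
{
    assert(channels > 0);
    for (int f = 0; f < frames; f++)
        for (int c = 0; c < channels; c++)
            out[c][f] = FAUSTFLOAT(interleaved[f * channels + c]);
}

// Inverse operation: merge per-channel DSP outputs back into an interleaved buffer.
void interleave(FAUSTFLOAT* const* in, int frames, int channels, float* interleaved)
{
    assert(channels > 0);
    for (int f = 0; f < frames; f++)
        for (int c = 0; c < channels; c++)
            interleaved[f * channels + c] = float(in[c][f]);
}
```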

The default compilation model uses separate audio input and output buffers that do not refer to the same memory locations. The -inpl (--in-place) code generation model generates code that works when input and output buffers are the same (which is typically needed in some embedded devices). This option currently only works in scalar (= default) code generation mode.

A Faust audio architecture module derives from an audio class, which can be defined as below (simplified version, see the real version here):

class audio {

    public:

        audio() {}
        virtual ~audio() {}

        /**
         * Init the DSP.
         * @param name - the DSP name to be given to the audio driver
         * (could appear as a JACK client for instance)
         * @param dsp - the dsp that will be initialized with the driver sample rate
         *
         * @return true if successful, false in case of driver failure.
         **/
        virtual bool init(const char* name, dsp* dsp) = 0;

        /**
         * Start audio processing.
         * @return true if successful, false in case of driver failure.
         **/
        virtual bool start() = 0;

        /**
         * Stop audio processing.
         **/
        virtual void stop() = 0;

        virtual void setShutdownCallback(shutdown_callback cb, void* arg) = 0;

        // Return buffer size in frames.
        virtual int getBufferSize() = 0;

        // Return the driver sample rate in Hz.
        virtual int getSampleRate() = 0;

        // Return the driver hardware inputs number.
        virtual int getNumInputs() = 0;

        // Return the driver hardware outputs number.
        virtual int getNumOutputs() = 0;

        /**
         * @return Returns the average proportion of available CPU
         * being spent inside the audio callbacks (between 0.0 and 1.0).
         **/
        virtual float getCPULoad() = 0;
};
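As a sketch of how the audio interface above can be implemented, here is a hypothetical offline driver that renders a fixed number of buffers with silent inputs instead of talking to hardware. The dsp and audio classes are reduced stand-ins (only the methods used here are kept; setShutdownCallback, getCPULoad and the hardware channel queries are omitted), and offline_audio is an illustrative name, not a class shipped with Faust.

```cpp
#include <cassert>
#include <vector>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Reduced stand-ins for the dsp and audio base classes.
class dsp {
    public:
        virtual ~dsp() {}
        virtual int getNumInputs() = 0;
        virtual int getNumOutputs() = 0;
        virtual void init(int sample_rate) = 0;
        virtual void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs) = 0;
};

class audio {
    public:
        virtual ~audio() {}
        virtual bool init(const char* name, dsp* dsp) = 0;
        virtual bool start() = 0;
        virtual void stop() = 0;
        virtual int getBufferSize() = 0;
        virtual int getSampleRate() = 0;
};

// Hypothetical driver: renders fNumBuffers buffers offline, with silent inputs.
class offline_audio : public audio {
    private:
        dsp* fDSP = nullptr;
        int fSampleRate, fBufferSize, fNumBuffers;
        std::vector<std::vector<FAUSTFLOAT>> fInputs, fOutputs;
    public:
        std::vector<FAUSTFLOAT> lastOutput;  // channel 0 of the last rendered buffer

        offline_audio(int sample_rate, int buffer_size, int num_buffers)
            : fSampleRate(sample_rate), fBufferSize(buffer_size), fNumBuffers(num_buffers) {}

        bool init(const char* /*name*/, dsp* d) override
        {
            fDSP = d;
            fDSP->init(fSampleRate);  // the DSP receives the driver sample rate
            // Non-interleaved buffers, one per channel, as expected by compute.
            fInputs.assign(d->getNumInputs(), std::vector<FAUSTFLOAT>(fBufferSize, FAUSTFLOAT(0)));
            fOutputs.assign(d->getNumOutputs(), std::vector<FAUSTFLOAT>(fBufferSize, FAUSTFLOAT(0)));
            return true;
        }
        bool start() override
        {
            assert(fDSP != nullptr);  // init must be called first
            std::vector<FAUSTFLOAT*> ins, outs;
            for (auto& ch : fInputs) ins.push_back(ch.data());
            for (auto& ch : fOutputs) outs.push_back(ch.data());
            for (int b = 0; b < fNumBuffers; b++) fDSP->compute(fBufferSize, ins.data(), outs.data());
            if (!fOutputs.empty()) lastOutput = fOutputs[0];
            return true;
        }
        void stop() override {}
        int getBufferSize() override { return fBufferSize; }
        int getSampleRate() override { return fSampleRate; }
};
```

A real driver would instead call compute from the native audio callback, once per hardware buffer; the offline variant can still be handy for testing a DSP without a sound card.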

The API is simple enough to give great flexibility to audio architecture implementations. The init method should initialize the audio. When init returns, the system should be in a safe state to recall the dsp object state. Here is the hierarchy of some of the supported audio drivers:

Connection to a plugin audio API

In the case of a plugin, an audio plugin architecture has to be developed, by integrating the Faust DSP new/init/compute/delete methods in the plugin API. Here is a concrete example using the JUCE framework:

- a FaustPlugInAudioProcessor class, subclass of juce::AudioProcessor, has to be defined. The Faust-generated C++ instance will be created in its constructor, either in monophonic or polyphonic mode (see later sections)
- the Faust DSP instance is initialized in the JUCE prepareToPlay method using the current sample rate value
- the Faust dsp compute method is called in the JUCE process method, which receives the audio input/output buffers to be processed
- additional methods can possibly be implemented to handle MIDI messages or save/restore the plugin parameters state for instance.

This methodology obviously has to be adapted for each supported plugin API.
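The same new/init/compute/delete mapping can be sketched independently of any real plugin SDK. In the following, PluginBase is a hypothetical host-facing class (not a JUCE or VST3 API), and dsp is reduced to the two methods used: the plugin owns the DSP, initializes it when the host announces the sample rate, and calls compute from its processing callback.

```cpp
#include <cassert>
#include <memory>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Reduced stand-in for the Faust dsp class.
class dsp {
    public:
        virtual ~dsp() {}
        virtual void init(int sample_rate) = 0;
        virtual void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs) = 0;
};

// Hypothetical host-facing plugin interface (not a real SDK API).
class PluginBase {
    public:
        virtual ~PluginBase() {}
        virtual void prepare(int sample_rate, int max_block_size) = 0;
        virtual void process(FAUSTFLOAT** inputs, FAUSTFLOAT** outputs, int frames) = 0;
};

// The plugin owns the Faust DSP through its life cycle:
// new (constructor), init (prepare), compute (process), delete (destructor).
class FaustPlugin : public PluginBase {
    private:
        std::unique_ptr<dsp> fDSP;
    public:
        explicit FaustPlugin(dsp* d) : fDSP(d) { assert(fDSP != nullptr); }

        void prepare(int sample_rate, int /*max_block_size*/) override
        {
            // A host would typically call prepare again if the sample rate changes.
            fDSP->init(sample_rate);
        }
        void process(FAUSTFLOAT** inputs, FAUSTFLOAT** outputs, int frames) override
        {
            fDSP->compute(frames, inputs, outputs);
        }
};
```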

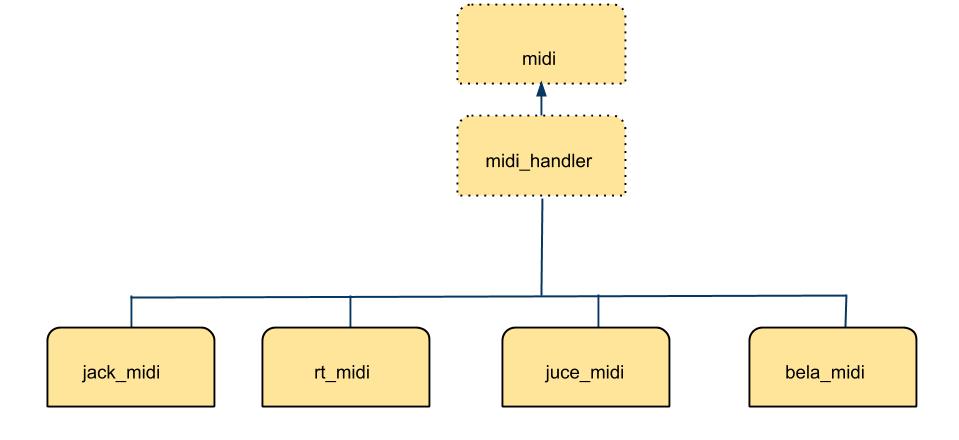

MIDI Architecture Modules

A MIDI architecture module typically connects a Faust program to the MIDI drivers. MIDI control connects DSP parameters with MIDI messages (in both directions), and can be used to trigger polyphonic instruments.

MIDI Messages in the DSP Source Code

MIDI control messages are described as metadata in UI elements. They are decoded by a MidiUI class, subclass of UI, which parses incoming MIDI messages and updates the appropriate control parameters, or sends MIDI messages when the UI elements (sliders, buttons...) are moved.

Defined Standard MIDI Messages

A special [midi:xxx yyy...] metadata needs to be added to the UI element. The full description of supported MIDI messages is part of the Faust documentation.
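For instance, a slider label containing [midi:ctrl 7] binds the slider to MIDI control change number 7. The sketch below shows one plausible way a controller could parse such a metadata value and map the 0..127 controller range linearly onto the slider's [min, max] range. CtrlBinding is hypothetical, and the linear mapping is an assumption, not necessarily what MidiUI does internally.

```cpp
#include <cassert>
#include <sstream>
#include <string>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical binding created from a "[midi:ctrl N]" metadata on a slider.
struct CtrlBinding {
    int ctrl = -1;               // controller number parsed from the metadata
    FAUSTFLOAT* zone = nullptr;  // slider zone to update
    FAUSTFLOAT min = 0, max = 1;

    // Parse the metadata value, e.g. "ctrl 7"; returns false for other message kinds.
    bool parse(const std::string& value)
    {
        std::istringstream is(value);
        std::string kind;
        return (is >> kind >> ctrl) && kind == "ctrl";
    }

    // Linear mapping of a 0..127 controller value onto [min, max].
    void ctrlChange(int num, int value)
    {
        assert(value >= 0 && value <= 127);
        if (num == ctrl && zone) *zone = min + (max - min) * FAUSTFLOAT(value) / FAUSTFLOAT(127);
    }
};
```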

MIDI Classes

A midi base class defining MIDI messages decoding/encoding methods has been developed. It will be used to receive and transmit MIDI messages:

class midi {

    public:

        midi() {}
        virtual ~midi() {}

        // Additional timestamped API for MIDI input
        virtual MapUI* keyOn(double, int channel, int pitch, int velocity)
        {
            return keyOn(channel, pitch, velocity);
        }
        virtual void keyOff(double, int channel, int pitch, int velocity = 0)
        {
            keyOff(channel, pitch, velocity);
        }
        virtual void keyPress(double, int channel, int pitch, int press)
        {
            keyPress(channel, pitch, press);
        }
        virtual void chanPress(double date, int channel, int press)
        {
            chanPress(channel, press);
        }
        virtual void pitchWheel(double, int channel, int wheel)
        {
            pitchWheel(channel, wheel);
        }
        virtual void ctrlChange(double, int channel, int ctrl, int value)
        {
            ctrlChange(channel, ctrl, value);
        }
        virtual void ctrlChange14bits(double, int channel, int ctrl, int value)
        {
            ctrlChange14bits(channel, ctrl, value);
        }
        virtual void rpn(double, int channel, int ctrl, int value)
        {
            rpn(channel, ctrl, value);
        }
        virtual void progChange(double, int channel, int pgm)
        {
            progChange(channel, pgm);
        }
        virtual void sysEx(double, std::vector<unsigned char>& message)
        {
            sysEx(message);
        }

        // MIDI sync
        virtual void startSync(double date) {}
        virtual void stopSync(double date) {}
        virtual void clock(double date) {}

        // Standard MIDI API
        virtual MapUI* keyOn(int channel, int pitch, int velocity) { return nullptr; }
        virtual void keyOff(int channel, int pitch, int velocity) {}
        virtual void keyPress(int channel, int pitch, int press) {}
        virtual void chanPress(int channel, int press) {}
        virtual void ctrlChange(int channel, int ctrl, int value) {}
        virtual void ctrlChange14bits(int channel, int ctrl, int value) {}
        virtual void rpn(int channel, int ctrl, int value) {}
        virtual void pitchWheel(int channel, int wheel) {}
        virtual void progChange(int channel, int pgm) {}
        virtual void sysEx(std::vector<unsigned char>& message) {}

        enum MidiStatus {
            // channel voice messages
            MIDI_NOTE_OFF = 0x80,
            MIDI_NOTE_ON = 0x90,
            MIDI_CONTROL_CHANGE = 0xB0,
            MIDI_PROGRAM_CHANGE = 0xC0,
            MIDI_PITCH_BEND = 0xE0,
            MIDI_AFTERTOUCH = 0xD0,         // aka channel pressure
            MIDI_POLY_AFTERTOUCH = 0xA0,    // aka key pressure
            MIDI_CLOCK = 0xF8,
            MIDI_START = 0xFA,
            MIDI_CONT = 0xFB,
            MIDI_STOP = 0xFC,
            MIDI_SYSEX_START = 0xF0,
            MIDI_SYSEX_STOP = 0xF7
        };

        enum MidiCtrl {
            ALL_NOTES_OFF = 123,
            ALL_SOUND_OFF = 120
        };

        enum MidiNPN {
            PITCH_BEND_RANGE = 0
        };
};

A pure interface for MIDI handlers that can send/receive MIDI messages to/from midi objects is defined:

struct midi_interface {
    virtual void addMidiIn(midi* midi_dsp) = 0;
    virtual void removeMidiIn(midi* midi_dsp) = 0;
    virtual ~midi_interface() {}
};

A midi_handler subclass implements the actual MIDI decoding and maintains a list of MIDI-aware components (classes inheriting from midi and ready to send and/or receive MIDI events) using the addMidiIn/removeMidiIn methods:

class midi_handler : public midi, public midi_interface {

    protected:

        std::vector<midi*> fMidiInputs;
        std::string fName;
        MidiNRPN fNRPN;

    public:

        midi_handler(const std::string& name = "MIDIHandler"):fName(name) {}
        virtual ~midi_handler() {}

        void addMidiIn(midi* midi_dsp) {...}
        void removeMidiIn(midi* midi_dsp) {...}
        ...
        ...
};
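To illustrate the decoding part, here is a minimal sketch of what a handler does with a raw 3-byte channel message: the high nibble of the status byte gives the message type (matching the MidiStatus values above), the low nibble gives the channel, and the decoded call is forwarded to every registered midi object. The reduced midi stand-in and simple_midi_handler are hypothetical; real handlers also have to deal with running status, system messages, timestamps and so on.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Reduced stand-in for the midi base class (three messages only).
class midi {
    public:
        virtual ~midi() {}
        virtual void keyOn(int channel, int pitch, int velocity) {}
        virtual void keyOff(int channel, int pitch, int velocity) {}
        virtual void ctrlChange(int channel, int ctrl, int value) {}
};

// Hypothetical handler: decodes raw bytes and forwards them to all registered inputs.
class simple_midi_handler {
    private:
        std::vector<midi*> fMidiInputs;
    public:
        void addMidiIn(midi* midi_dsp) { if (midi_dsp) fMidiInputs.push_back(midi_dsp); }
        void removeMidiIn(midi* midi_dsp)
        {
            fMidiInputs.erase(std::remove(fMidiInputs.begin(), fMidiInputs.end(), midi_dsp), fMidiInputs.end());
        }

        // Decode one 3-byte channel voice message.
        void handleData(unsigned char status, unsigned char data1, unsigned char data2)
        {
            assert(status & 0x80);        // status bytes have their high bit set
            int type = status & 0xF0;     // message type, e.g. 0x90 = MIDI_NOTE_ON
            int channel = status & 0x0F;  // channel 0..15
            for (midi* m : fMidiInputs) {
                if (type == 0x90 && data2 > 0) {
                    m->keyOn(channel, data1, data2);
                } else if (type == 0x80 || type == 0x90) {
                    m->keyOff(channel, data1, data2);  // note-on with velocity 0 acts as note-off
                } else if (type == 0xB0) {
                    m->ctrlChange(channel, data1, data2);
                }
            }
        }
};
```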

Several concrete implementations subclassing midi_handler using native APIs have been written and can be found in the faust/midi folder:

Depending on the native MIDI API being used, event timestamps are either expressed in absolute time or in frames. They are converted to offsets expressed in samples relative to the beginning of the audio buffer.

Connected with the MidiUI class (a subclass of UI), they allow a given DSP to be controlled with incoming MIDI messages or possibly send MIDI messages when its internal control state changes.

In the following piece of code, a MidiUI object is created and connected to a rt_midi MIDI messages handler (using the RTMidi library), then given as a parameter to the standard buildUserInterface to control DSP parameters:

...
rt_midi midi_handler("MIDI");
MidiUI midi_interface(&midi_handler);
DSP->buildUserInterface(&midi_interface);
...
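As noted above, event timestamps given in absolute time have to be converted to offsets in samples relative to the beginning of the audio buffer. For times in microseconds this is simple arithmetic: offset = (t_event - t_buffer_start) * sample_rate / 10^6. The following helper is a hypothetical sketch of that computation; clamping out-of-range events to the buffer edges is an assumption made here, not a documented Faust behavior.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: converts an absolute event time (in microseconds) into a
// sample offset from the start of the current audio buffer. Events falling
// outside the buffer are clamped to its first or last frame (an assumption).
int eventOffsetInFrames(double event_usec, double buffer_start_usec,
                        int sample_rate, int buffer_size)
{
    assert(buffer_size > 0 && sample_rate > 0);
    double frames = (event_usec - buffer_start_usec) * sample_rate / 1e6;
    int offset = static_cast<int>(std::floor(frames));
    if (offset < 0) offset = 0;
    if (offset >= buffer_size) offset = buffer_size - 1;
    return offset;
}
```

For example, an event arriving 1 ms after the start of a buffer at 48 kHz lands at sample 48.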

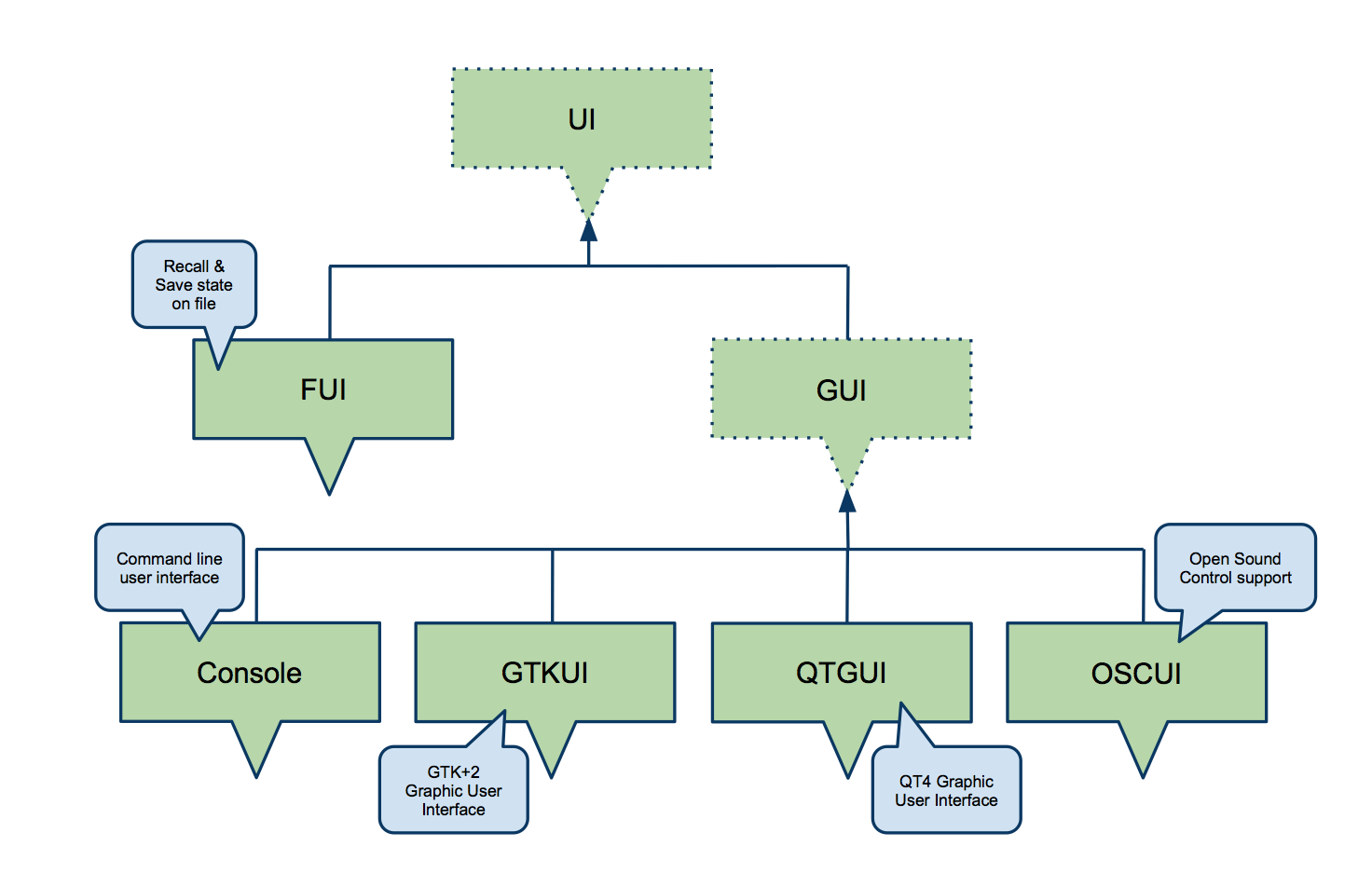

UI Architecture Modules

A UI architecture module links user actions (i.e., via graphic widgets, command line parameters, OSC messages, etc.) with the Faust program to control. It is responsible for associating program parameters with user interface elements and for updating parameter values according to user actions. This association is triggered by the buildUserInterface call, where the dsp asks a UI object to build the DSP module controllers.

Since the interface is basically graphic-oriented, the main concepts are widget based: a UI architecture module is semantically oriented to handle active widgets, passive widgets and widgets layout.

A Faust UI architecture module derives from the UI base class:

template <typename REAL>
struct UIReal {

    UIReal() {}
    virtual ~UIReal() {}

    // -- widget's layouts
    virtual void openTabBox(const char* label) = 0;
    virtual void openHorizontalBox(const char* label) = 0;
    virtual void openVerticalBox(const char* label) = 0;
    virtual void closeBox() = 0;

    // -- active widgets
    virtual void addButton(const char* label, REAL* zone) = 0;
    virtual void addCheckButton(const char* label, REAL* zone) = 0;
    virtual void addVerticalSlider(const char* label, REAL* zone, REAL init,
                                   REAL min, REAL max, REAL step) = 0;
    virtual void addHorizontalSlider(const char* label, REAL* zone, REAL init,
                                     REAL min, REAL max, REAL step) = 0;
    virtual void addNumEntry(const char* label, REAL* zone, REAL init,
                             REAL min, REAL max, REAL step) = 0;

    // -- passive widgets
    virtual void addHorizontalBargraph(const char* label, REAL* zone, REAL min, REAL max) = 0;
    virtual void addVerticalBargraph(const char* label, REAL* zone, REAL min, REAL max) = 0;

    // -- soundfiles
    virtual void addSoundfile(const char* label, const char* filename, Soundfile** sf_zone) = 0;

    // -- metadata declarations
    virtual void declare(REAL* zone, const char* key, const char* val) {}
};

struct UI : public UIReal<FAUSTFLOAT>
{
    UI() {}
    virtual ~UI() {}
};

The FAUSTFLOAT* zone element is the primary connection point between the control interface and the dsp code. The compiled dsp Faust code will give access to all internal control value addresses used by the dsp code by calling the appropriate addButton, addVerticalSlider, addNumEntry etc. methods (depending on what is described in the original Faust DSP source code).

The control/UI code keeps those addresses, and will typically change their pointed values each time a control value in the dsp code has to be changed. On the dsp side, all control values are sampled once at the beginning of the compute method, so that they keep the same value during the entire audio buffer.

Writing and reading the control values is typically done in two different threads: the controller (a GUI, OSC or MIDI one, etc.) writes the values, and the audio real-time thread reads them in the audio callback. Since writing/reading the FAUSTFLOAT* zone element is atomic, there is (in general) no need for a complex synchronization mechanism between the writer (controller) and the reader (the Faust dsp object).
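The zone mechanism can be sketched with a hand-written DSP that follows the pattern of generated code: buildUserInterface hands the address of a control variable to the UI object, a controller later writes through that pointer, and compute reads the variable once per buffer. GainDSP and ZoneRecorder are hypothetical names, and the UI is reduced to a single method.

```cpp
#include <cassert>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical controller reduced to one method: it only remembers the zone.
struct ZoneRecorder {
    FAUSTFLOAT* zone = nullptr;
    void addHorizontalSlider(const char* /*label*/, FAUSTFLOAT* z, FAUSTFLOAT /*init*/,
                             FAUSTFLOAT /*min*/, FAUSTFLOAT /*max*/, FAUSTFLOAT /*step*/)
    {
        zone = z;  // later writes through this pointer change the DSP control
    }
};

// Hypothetical hand-written DSP following the generated-code pattern.
struct GainDSP {
    FAUSTFLOAT fHslider0 = FAUSTFLOAT(1);  // the control value (the "zone")

    void buildUserInterface(ZoneRecorder* ui)
    {
        ui->addHorizontalSlider("gain", &fHslider0, FAUSTFLOAT(1), FAUSTFLOAT(0), FAUSTFLOAT(1), FAUSTFLOAT(0.01));
    }
    void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs)
    {
        assert(count >= 0);
        const FAUSTFLOAT gain = fHslider0;  // read once: constant for the whole buffer
        for (int i = 0; i < count; i++) outputs[0][i] = gain * inputs[0][i];
    }
};
```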

Here is part of the UI classes hierarchy:

Active Widgets

Active widgets are graphical elements controlling a parameter value. They are initialized with the widget name and a pointer to the linked value, using the FAUSTFLOAT macro type (defined at compile time as either float or double). Active widgets in Faust are Button, CheckButton, VerticalSlider, HorizontalSlider and NumEntry.

A GUI architecture must implement a method addXxx(const char* name, FAUSTFLOAT* zone, ...) for each active widget. Additional parameters are available for Slider and NumEntry: the init, min, max and step values.

Passive Widgets

Passive widgets are graphical elements reflecting values. Similarly to active widgets, they are initialized with the widget name and a pointer to the linked value. Passive widgets in Faust are HorizontalBarGraph and VerticalBarGraph.

A UI architecture must implement a method addXxx(const char* name, FAUSTFLOAT* zone, ...) for each passive widget. Additional parameters are available, depending on the passive widget type.

Widgets Layout

Generally, a GUI is hierarchically organized into boxes and/or tab boxes. A UI architecture must support the following methods to set up this hierarchy:

openTabBox(const char* label);
openHorizontalBox(const char* label);
openVerticalBox(const char* label);
closeBox();

Note that all the widgets are added to the current box.
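Because widgets are added to the current box, a UI implementation can track the open boxes with a stack of labels and derive a full path for every widget, which is the idea behind the /group1/group2/.../label paths used by MapUI. The following sketch, with the hypothetical class PathMapUI and only a few UI methods, builds such paths and offers a simple setParamValue by path; it is not the MapUI/PathBuilder code itself.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical UI subset: tracks the box hierarchy with a label stack,
// so each widget can be addressed by its full path, e.g. "/Oscillator/freq".
class PathMapUI {
    private:
        std::vector<std::string> fGroups;               // currently open boxes
        std::map<std::string, FAUSTFLOAT*> fPathZones;  // full path -> zone

        std::string buildPath(const std::string& label) const
        {
            std::string path;
            for (const auto& g : fGroups) path += "/" + g;
            return path + "/" + label;
        }
    public:
        void openVerticalBox(const char* label) { fGroups.push_back(label); }
        void openHorizontalBox(const char* label) { fGroups.push_back(label); }
        void closeBox()
        {
            assert(!fGroups.empty());
            fGroups.pop_back();
        }

        void addHorizontalSlider(const char* label, FAUSTFLOAT* zone,
                                 FAUSTFLOAT, FAUSTFLOAT, FAUSTFLOAT, FAUSTFLOAT)
        {
            fPathZones[buildPath(label)] = zone;  // the widget belongs to the current box
        }

        bool setParamValue(const std::string& path, FAUSTFLOAT value)
        {
            auto it = fPathZones.find(path);
            if (it == fPathZones.end()) return false;
            *it->second = value;
            return true;
        }
};
```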

Metadata

The Faust language allows widget labels to contain metadata enclosed in square brackets as key/value pairs. These metadata are handled at GUI level by a declare method taking as arguments a pointer to the widget's associated zone, the metadata key and the value:

declare(FAUSTFLOAT* zone, const char* key, const char* value);

Here is the table of currently supported general metadata:

| Key | Value |
|---|---|
| tooltip | actual string content |
| hidden | 0 or 1 |
| unit | Hz or dB |
| scale | log or exp |
| style | knob or led or numerical |
| style | radio{'label1':v1;'label2':v2...} |
| style | menu{'label1':v1;'label2':v2...} |
| acc | axe curve amin amid amax |
| gyr | axe curve amin amid amax |
| screencolor | red or green or blue or white |

Here acc means accelerometer and gyr means gyroscope, both use the same parameters (a mapping description) but are linked to different sensors.

Some typical examples where several metadata are defined could be:

nentry("freq [unit:Hz][scale:log][acc:0 0 -30 0 30][style:menu{'white noise':0;'pink noise':1;'sine':2}][hidden:0]", 0, 20, 100, 1)

or:

vslider("freq [unit:dB][style:knob][gyr:0 0 -30 0 30]", 0, 20, 100, 1)

When one or several metadata are added in the same item label, they will appear in the generated code as one or more successive declare(FAUSTFLOAT* zone, const char* key, const char* value); lines before the line describing the item itself. Thus the UI managing code has to associate them with the proper item. Look at the MetaDataUI class for an example of this technique.
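In the generated C++ code, each declare call for an item receives the same zone pointer as the item itself, so one simple way to associate metadata with the proper item is to index them by zone. The sketch below does this in a hypothetical MetaCollector class (only declare and addHorizontalSlider are shown) and extracts the unit metadata of each slider; box-level metadata, which come with a null zone, are simply ignored here. This is an illustration, not the MetaDataUI implementation.

```cpp
#include <cassert>
#include <map>
#include <string>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical metadata collector keyed by zone.
class MetaCollector {
    private:
        std::map<FAUSTFLOAT*, std::map<std::string, std::string>> fZoneMeta;
        std::map<std::string, std::string> fLabelUnit;  // label -> unit
    public:
        void declare(FAUSTFLOAT* zone, const char* key, const char* value)
        {
            assert(key != nullptr && value != nullptr);
            // A null zone carries box-level metadata; this sketch ignores it.
            if (zone) fZoneMeta[zone][key] = value;
        }

        void addHorizontalSlider(const char* label, FAUSTFLOAT* zone,
                                 FAUSTFLOAT, FAUSTFLOAT, FAUSTFLOAT, FAUSTFLOAT)
        {
            // The declare calls for this item came just before, with the same zone.
            const auto& meta = fZoneMeta[zone];
            auto it = meta.find("unit");
            if (it != meta.end()) fLabelUnit[label] = it->second;
        }

        std::string unitOf(const std::string& label) const
        {
            auto it = fLabelUnit.find(label);
            return it == fLabelUnit.end() ? "" : it->second;
        }
};
```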

MIDI specific metadata are described here and are decoded by the MidiUI class.

Note that metadata are not supported in all architecture files. Some of them (like acc or gyr) only make sense on platforms with accelerometer or gyroscope sensors. The set of metadata may be extended in the future and can possibly be adapted for a specific project. They can be decoded using the MetaDataUI class.

Graphic-oriented, pure controllers, code generator UI

Even if the UI architecture module is graphic-oriented, a given implementation can perfectly choose to ignore all layout information and only keep the controller ones, like the buttons, sliders, nentries, bargraphs. This is typically what is done in the MidiUI or OSCUI architectures.

Note that pure code generators can also be written. The JSONUI UI architecture is an example of an architecture generating the DSP JSON description as a text file.

DSP JSON Description

The full description of a given compiled DSP can be generated as a JSON file, to be used at several places in the architecture system. This JSON describes the DSP with its number of inputs/outputs, some metadata (filename, name, used compilation parameters, used libraries etc.) as well as its UI with a hierarchy of groups up to terminal items (buttons, sliders, nentries, bargraphs) with all their parameters (type, label, shortname, address, meta, init, min, max and step values). For the following DSP program:

import("stdfaust.lib");
vol = hslider("volume [unit:dB]", 0, -96, 0, 0.1) : ba.db2linear : si.smoo;
freq = hslider("freq [unit:Hz]", 600, 20, 2000, 1);
process = vgroup("Oscillator", os.osc(freq) * vol) <: (_,_);

The generated JSON file is then:

{
    "name": "foo",
    "filename": "foo.dsp",
    "version": "2.40.8",
    "compile_options": "-lang cpp -es 1 -mcd 16 -single -ftz 0",
    "library_list": [],
    "include_pathnames": ["/usr/local/share/faust"],
    "inputs": 0,
    "outputs": 2,
    "meta": [
        { "basics.lib/name": "Faust Basic Element Library" },
        { "basics.lib/version": "0.6" },
        { "compile_options": "-lang cpp -es 1 -mcd 16 -single -ftz 0" },
        { "filename": "foo.dsp" },
        { "maths.lib/author": "GRAME" },
        { "maths.lib/copyright": "GRAME" },
        { "maths.lib/license": "LGPL with exception" },
        { "maths.lib/name": "Faust Math Library" },
        { "maths.lib/version": "2.5" },
        { "name": "foo" },
        { "oscillators.lib/name": "Faust Oscillator Library" },
        { "oscillators.lib/version": "0.3" },
        { "platform.lib/name": "Generic Platform Library" },
        { "platform.lib/version": "0.2" },
        { "signals.lib/name": "Faust Signal Routing Library" },
        { "signals.lib/version": "0.1" }
    ],
    "ui": [
        {
            "type": "vgroup",
            "label": "Oscillator",
            "items": [
                {
                    "type": "hslider",
                    "label": "freq",
                    "shortname": "freq",
                    "address": "/Oscillator/freq",
                    "meta": [
                        { "unit": "Hz" }
                    ],
                    "init": 600,
                    "min": 20,
                    "max": 2000,
                    "step": 1
                },
                {
                    "type": "hslider",
                    "label": "volume",
                    "shortname": "volume",
                    "address": "/Oscillator/volume",
                    "meta": [
                        { "unit": "dB" }
                    ],
                    "init": 0,
                    "min": -96,
                    "max": 0,
                    "step": 0.1
                }
            ]
        }
    ]
}

The JSON file can be generated with the faust -json foo.dsp command, or programmatically using the JSONUI UI architecture (see the Some Useful UI Classes and Tools for Developers section below).

Here is the description of ready-to-use UI classes, followed by classes to be used in developer code:

GUI Builders

Here is the description of the main GUI classes:

- the GTKUI class uses the GTK toolkit to create a Graphical User Interface with a proper group-based layout

- the QTUI class uses the QT toolkit to create a Graphical User Interface with a proper group based layout

- the JuceUI class uses the JUCE framework to create a Graphical User Interface with a proper group based layout

Non-GUI Controllers

Here is the description of the main non-GUI controller classes:

- the OSCUI class implements OSC remote control in both directions

- the httpdUI class implements HTTP remote control using the libmicrohttpd library to embed an HTTP server inside the application. Then by opening a browser on a specific URL, the GUI will appear and allow controlling the remote application or plugin. The connection works in both directions

- the MidiUI class implements MIDI control in both directions, as explained in more detail in the MIDI Architecture Modules section

Some Useful UI Classes and Tools for Developers

Some useful UI classes and tools can possibly be reused in developer code:

- the MapUI class establishes a mapping between UI items and their labels, shortnames or paths, and offers a setParamValue/getParamValue API to set and get their values. It uses a helper PathBuilder class to create complete shortnames and pathnames to the leaves in the UI hierarchy. Note that the item path encodes the UI hierarchy in the form of a /group1/group2/.../label string and is the way to distinguish controls that may have the same label, but a different location in the UI tree. Using shortnames (built so that they never collide) is an alternative way to access items. The setParamValue/getParamValue API takes either labels, shortnames or paths as the way to describe the control, but using shortnames or paths is the safer way to use it
- the extended APIUI offers a setParamValue/getParamValue API similar to MapUI, with additional methods to deal with accelerometer/gyroscope kind of metadata
- the MetaDataUI class decodes all currently supported metadata and can be used to retrieve their values
- the JSONUI class allows us to generate the JSON description of a given DSP
- the JSONUIDecoder class is used to decode the DSP JSON description and implement its buildUserInterface and metadata methods
- the FUI class allows us to save and restore the parameters state as a text file
- the SoundUI class with the associated Soundfile class is used to implement the soundfile primitive, and load the described audio resources (typically audio files), by using different concrete implementations, either using libsndfile (with the LibsndfileReader.h file), or JUCE (with the JuceReader file). Paths to sound files can be absolute, but it should be noted that a relative path mechanism can be set up when creating an instance of SoundUI, in order to load sound files with a more flexible strategy.
- the ControlSequenceUI class with the associated OSCSequenceReader class allows controlling parameter changes over time, using the OSC time tag format. Changing the control values will have to be mixed with audio rendering. Look at the sndfile.cpp use-case.
- the ValueConverter file contains several mapping classes used to map user interface values (for example a GUI slider delivering values between 0 and 1) to Faust values (for example a vslider between 20 and 2000) using linear/log/exp scales. It also provides classes to handle the [acc:a b c d e] and [gyr:a b c d e] sensor control metadata.
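As an example of the kind of mapping ValueConverter provides, a logarithmic scale can map a normalized UI position u in [0, 1] to a value v in [lo, hi] with v = lo * (hi/lo)^u, and back with u = log(v/lo) / log(hi/lo), so that equal slider moves give equal value ratios. The following LogConverter is a hypothetical sketch of this formula, not the ValueConverter code itself.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical log-scale converter between a normalized UI position
// u in [0, 1] and a parameter value in [lo, hi], with 0 < lo < hi.
struct LogConverter {
    double lo, hi;

    LogConverter(double lo_, double hi_) : lo(lo_), hi(hi_) { assert(lo > 0 && hi > lo); }

    // UI position -> Faust value
    double ui2faust(double u) const { return lo * std::pow(hi / lo, u); }

    // Faust value -> UI position
    double faust2ui(double v) const { return std::log(v / lo) / std::log(hi / lo); }
};
```

With the [20, 2000] range used above, the middle of the slider corresponds to 200, not to the linear midpoint 1010.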

Multi-Controller and Synchronization

A given DSP can perfectly well be controlled by several UI classes at the same time, and they will all read and write the same DSP control memory zones. Here is an example of code using a GUI built with the GTKUI architecture, as well as OSC control using OSCUI:

...
GTKUI gtk_interface(name, &argc, &argv);
DSP->buildUserInterface(&gtk_interface);
OSCUI osc_interface(name, argc, argv);
DSP->buildUserInterface(&osc_interface);
...

Since several controllers access the same values, you may have to synchronize them, for instance to have the GUI sliders or buttons reflect a state that has been changed by the OSCUI controller at reception time, or to have OSC messages sent each time UI items like sliders or buttons are moved.

This synchronization mechanism is implemented in a generic way in the GUI class. First the uiItemBase class is defined as the basic synchronizable memory zone; items are then grouped in a list controlling the same zone from different GUI instances. The uiItemBase::modifyZone method is used to change the uiItemBase state at reception time, and uiItemBase::reflectZone will be called to reflect a new value, and can change the widget layout for instance, or send a message (OSC, MIDI...).

All classes needing to use this synchronization mechanism have to subclass the GUI class, which keeps all of them at runtime in the static GUI::fGuiList class variable. This is the case for the previously used GTKUI and OSCUI classes. Note that when using the GUI class, the following two static class variables have to be defined in the code (once, in one .cpp file of the project), as in this code example:

// Globals
std::list<GUI*> GUI::fGuiList;
ztimedmap GUI::gTimedZoneMap;

Finally the static GUI::updateAllGuis() synchronization method will have to be called regularly, in the application or plugin event management loop, or in a periodic timer for instance. This is typically implemented in the GUI::run method which has to be called to start event or messages processing.
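The synchronization scheme can be sketched in a few lines. In this hypothetical, simplified version of the uiItemBase idea, each controller registers an item on a shared zone with a cache of the last known value; modifyZone updates both the zone and the originating item's cache, and a periodic updateAll (playing the role of GUI::updateAllGuis) calls the reflect function of every other item whose cache is out of date. SyncItem and SyncRegistry are illustrative names, not Faust classes.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

#ifndef FAUSTFLOAT
#define FAUSTFLOAT float
#endif

// Hypothetical, simplified take on the uiItemBase idea.
struct SyncItem {
    FAUSTFLOAT* zone;                         // shared DSP control zone
    FAUSTFLOAT cache;                         // last value this item has shown or sent
    std::function<void(FAUSTFLOAT)> reflect;  // e.g. move a slider, send an OSC message

    SyncItem(FAUSTFLOAT* z, std::function<void(FAUSTFLOAT)> r)
        : zone(z), cache(*z), reflect(std::move(r)) {}

    // Called when this controller receives a new value (user action, incoming message).
    void modifyZone(FAUSTFLOAT v)
    {
        cache = v;  // the originating item is already up to date: no echo
        *zone = v;
    }
};

struct SyncRegistry {
    std::vector<SyncItem> items;

    // To be called regularly (event loop or timer), in the spirit of GUI::updateAllGuis().
    void updateAll()
    {
        for (auto& item : items) {
            assert(item.zone != nullptr);
            if (*item.zone != item.cache) {
                item.cache = *item.zone;
                item.reflect(item.cache);
            }
        }
    }
};
```

Polling and comparing cached values keeps the audio thread out of the picture entirely: only controller-side code runs updateAll.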

In the following code, the OSCUI::run method is called first to start processing OSC messages, then the blocking GTKUI::run method, which opens the GUI window, to be closed to finally finish the application:

...
// Start OSC messages processing
osc_interface.run();
// Start GTK GUI as the last one, since it blocks until the opened window is closed
gtk_interface.run();
...

DSP Architecture Modules

The Faust compiler produces a DSP module whose format will depend on the chosen backend: a C++ class with the -lang cpp option, a data structure with associated functions with the -lang c option, an LLVM IR module with the -lang llvm option, a WebAssembly binary module with the -lang wasm option, a bytecode stream with the -lang interp option... and so on.

The Base dsp Class

In C++, the generated class derives from a base dsp class:

class dsp {

public:

dsp() {}

virtual ~dsp() {}

/* Return instance number of audio inputs */

virtual int getNumInputs() = 0;

/* Return instance number of audio outputs */

virtual int getNumOutputs() = 0;

/**

* Trigger the ui_interface parameter with instance specific calls

* to 'openTabBox', 'addButton', 'addVerticalSlider'... in order to build the UI.

*

* @param ui_interface - the user interface builder

*/

virtual void buildUserInterface(UI* ui_interface) = 0;

/* Return the sample rate currently used by the instance */

virtual int getSampleRate() = 0;

/**

* Global init, calls the following methods:

* - static class 'classInit': static tables initialization

* - 'instanceInit': constants and instance state initialization

*

* @param sample_rate - the sampling rate in Hz

*/

virtual void init(int sample_rate) = 0;

/**

* Init instance state

*

* @param sample_rate - the sampling rate in Hz

*/

virtual void instanceInit(int sample_rate) = 0;

/**

* Init instance constant state

*

* @param sample_rate - the sampling rate in HZ

*/

virtual void instanceConstants(int sample_rate) = 0;

/* Init default control parameters values */

virtual void instanceResetUserInterface() = 0;

/* Init instance state (like delay lines..) but keep the control parameter values */

virtual void instanceClear() = 0;

/**

* Return a clone of the instance.

*

* @return a copy of the instance on success, otherwise a null pointer.

*/

virtual dsp* clone() = 0;

/**

* Trigger the Meta* parameter with instance specific calls to 'declare'

* (key, value) metadata.

*

* @param m - the Meta* meta user

*/

virtual void metadata(Meta* m) = 0;

/**

* DSP instance computation, to be called with successive in/out audio buffers.

*

* @param count - the number of frames to compute

* @param inputs - the input audio buffers as an array of non-interleaved

* FAUSTFLOAT samples (either float, double or quad)

* @param outputs - the output audio buffers as an array of non-interleaved

* FAUSTFLOAT samples (either float, double or quad)

*

*/

virtual void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs) = 0;

/**

* Alternative DSP instance computation method for use by subclasses, incorporating an additional `date_usec` parameter,

* which specifies the timestamp of the first sample in the audio buffers.

*

* @param date_usec - the timestamp in microsec given by audio driver. By convention timestamp of -1 means 'no timestamp conversion',

* events already have a timestamp expressed in frames.

* @param count - the number of frames to compute

* @param inputs - the input audio buffers as an array of non-interleaved

* FAUSTFLOAT samples (either float, double or quad)

* @param outputs - the output audio buffers as an array of non-interleaved

* FAUSTFLOAT samples (either float, double or quad)

*

*/

virtual void compute(double date_usec, int count,

FAUSTFLOAT** inputs,

FAUSTFLOAT** outputs) = 0;

};

The dsp class is central to the Faust architecture design:

- the getNumInputs and getNumOutputs methods provide information about the signal processor

- the buildUserInterface method creates the user interface using a given UI class object (see later)

- the init method (and some more specialized methods like instanceInit, instanceConstants, etc.) is called to initialize the dsp object with a given sampling rate, typically obtained from the audio architecture

- the compute method is called by the audio architecture to execute the actual audio processing. It takes a count number of samples to process, and inputs and outputs arrays of non-interleaved float/double samples, to be allocated and handled by the audio driver with the required dsp input and output channels (as given by getNumInputs and getNumOutputs)

- the clone method can be used to duplicate the instance

- the metadata(Meta* m) method can be called with a Meta object to decode the instance global metadata (see next section)

Note that the FAUSTFLOAT macro is typically defined as the actual sample type, either float or double, for instance with #define FAUSTFLOAT float in the code.

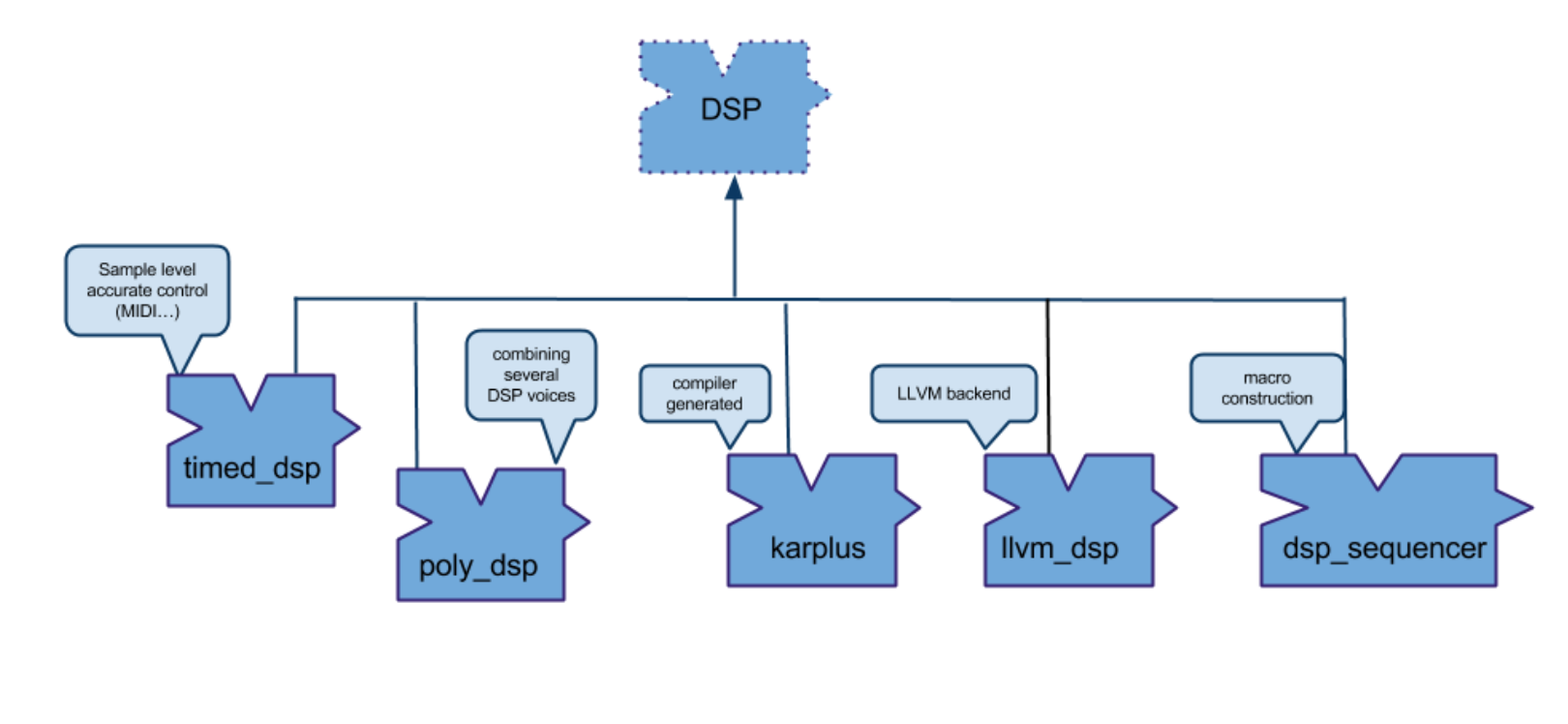

For a given compiled DSP program, the compiler will generate a mydsp subclass of dsp and fill in the different methods. For backends that produce code dynamically, like the LLVM IR, Cmajor or Interpreter ones, the actual code (an LLVM module, a Cmajor module or a bytecode stream) is wrapped in some additional C++ glue code, to finally produce an llvm_dsp typed object (defined in the llvm-dsp.h file), a cmajorpatch_dsp typed object (defined in the cmajorpatch-dsp.h file) or an interpreter_dsp typed object (defined in the interpreter-dsp.h file), ready to be used with the UI and audio C++ classes (like the C++ generated class). See the following class diagram:

Changing the generated class name

By default, the generated class is named mydsp, but this can be changed using the -cn new_name option. Note that any mydsp string used in the architecture file will be automatically replaced by the new_name string, so other sections of the architecture file can adapt to the renaming.

Global DSP metadata

All global metadata declarations in Faust start with declare, followed by a key and a string. For example:

declare name "Noise";

allows us to specify the name of a Faust program as a whole.

Unlike regular comments, metadata declarations will appear in the C++ code generated by the Faust compiler, for instance the Faust program:

declare name "NoiseProgram";

declare author "MySelf";

declare copyright "MyCompany";

declare version "1.00";

declare license "BSD";

import("stdfaust.lib");

process = no.noise;

will generate the following C++ metadata(Meta* m) method in the dsp class:

void metadata(Meta* m)

{

m->declare("author", "MySelf");

m->declare("compile_options", "-lang cpp -es 1 -scal -ftz 0");

m->declare("copyright", "MyCompany");

m->declare("filename", "metadata.dsp");

m->declare("license", "BSD");

m->declare("name", "NoiseProgram");

m->declare("noises.lib/name", "Faust Noise Generator Library");

m->declare("noises.lib/version", "0.0");

m->declare("version", "1.00");

}

which interacts with an instance of an implementation class of the following virtual Meta class:

struct Meta

{

virtual ~Meta() {};

virtual void declare(const char* key, const char* value) = 0;

};

These metadata declarations belong to three different types:

- metadata like compile_options or filename are automatically generated

- metadata like author or copyright are part of the Global Metadata

- metadata like noises.lib/name are part of the Function Metadata

Specialized subclasses of the Meta class can be implemented to decode the needed key/value pairs for a given use-case.

Macro Construction of DSP Components

The Faust program specification is usually entirely done in the language itself. But in some specific cases it may be useful to develop separate DSP components and combine them in a more complex setup.

Since taking advantage of the huge number of already available UI and audio architecture files is important, keeping the same dsp API is preferable, so that more complex DSPs can be controlled and rendered the usual way. Extended DSP classes will typically subclass the dsp base class and override or complete part of its API.

DSP Decorator Pattern

A dsp_decorator class, a subclass of the root dsp class, has first been defined. Following the decorator design pattern, it allows behavior to be added to an individual object, either statically or dynamically.

As examples of the decorator pattern, the timed_dsp class decorates a given DSP with sample accurate control capability, and the mydsp_poly class builds polyphonic DSPs; both are explained in the next sections.
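The decorator idea can be sketched as follows. To stay self-contained, the sketch uses a hypothetical, heavily reduced mini_dsp interface (three methods, float samples) instead of the real dsp class, and a hypothetical gain_decorator; it only shows the forwarding structure, not the actual dsp_decorator code:

```cpp
// Hypothetical, heavily reduced stand-in for the dsp interface.
struct mini_dsp {
    virtual ~mini_dsp() {}
    virtual int getNumInputs() = 0;
    virtual int getNumOutputs() = 0;
    virtual void compute(int count, float** inputs, float** outputs) = 0;
};

// Decorator base: owns a wrapped DSP and forwards every call to it.
class mini_decorator : public mini_dsp {
protected:
    mini_dsp* fDSP;
public:
    explicit mini_decorator(mini_dsp* dsp) : fDSP(dsp) {}
    ~mini_decorator() override { delete fDSP; }
    mini_decorator(const mini_decorator&) = delete;
    mini_decorator& operator=(const mini_decorator&) = delete;
    int getNumInputs() override { return fDSP->getNumInputs(); }
    int getNumOutputs() override { return fDSP->getNumOutputs(); }
    void compute(int count, float** inputs, float** outputs) override
    {
        fDSP->compute(count, inputs, outputs);
    }
};

// Example decoration: scales every output of the wrapped DSP by a fixed gain.
class gain_decorator : public mini_decorator {
    float fGain;
public:
    gain_decorator(mini_dsp* dsp, float gain) : mini_decorator(dsp), fGain(gain) {}
    void compute(int count, float** inputs, float** outputs) override
    {
        mini_decorator::compute(count, inputs, outputs);   // behavior of the wrapped DSP
        for (int c = 0; c < getNumOutputs(); c++) {        // added behavior
            for (int i = 0; i < count; i++) outputs[c][i] *= fGain;
        }
    }
};
```

Because gain_decorator still exposes the same interface, it can be handed to any code expecting the undecorated object.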

Combining DSP Components

A few additional macro construction classes, subclasses of the root dsp class, have been defined in the dsp-combiner.h header file with a five-operator construction API:

- the dsp_sequencer class combines two DSPs in sequence, assuming that the number of outputs of the first DSP equals the number of inputs of the second one. It somewhat mimics the sequence (:) operator of the language by combining two separate C++ objects. Its buildUserInterface method is overridden to group the two DSPs in a tabgroup, so that control parameters of both DSPs can be individually controlled. Its compute method is overridden to call each DSP compute in sequence, using an intermediate output buffer produced by the first DSP as the input given to the second DSP.

- the dsp_parallelizer class combines two DSPs in parallel. It somewhat mimics the parallel (,) operator of the language by combining two separate C++ objects. Its getNumInputs/getNumOutputs methods are overridden to correctly reflect the inputs/outputs of the resulting DSP as the sum of the two combined ones. Its buildUserInterface method is overridden to group the two DSPs in a tabgroup, so that control parameters of both DSPs can be individually controlled. Its compute method is overridden to call each DSP compute, with each DSP consuming and producing its own number of input/output audio buffers taken from the method parameters.

This methodology is followed to implement the three remaining composition operators (split, merge, recursion), which ends up with a C++ API to combine DSPs with the usual five operators: createDSPSequencer, createDSPParallelizer, createDSPSplitter, createDSPMerger and createDSPRecursiver, to be used at C++ level to dynamically combine DSPs.
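The compute strategies of the sequencer and parallelizer can be sketched on a hypothetical reduced interface. This is not the dsp-combiner.h code: UI grouping is omitted, the class names are invented for the illustration, and the sequencer assumes compute is never called with more than max_frames frames:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, heavily reduced stand-in for the dsp interface.
struct mini_dsp {
    virtual ~mini_dsp() {}
    virtual int getNumInputs() = 0;
    virtual int getNumOutputs() = 0;
    virtual void compute(int count, float** inputs, float** outputs) = 0;
};

// A : B, assuming A's number of outputs equals B's number of inputs. Owns both DSPs.
class mini_sequencer : public mini_dsp {
    mini_dsp* fA;
    mini_dsp* fB;
    std::vector<std::vector<float>> fBuffers;  // one intermediate buffer per A output
    std::vector<float*> fPtrs;                 // channel pointers into fBuffers
public:
    mini_sequencer(mini_dsp* a, mini_dsp* b, int max_frames)
        : fA(a), fB(b),
          fBuffers(a->getNumOutputs(), std::vector<float>(max_frames)),
          fPtrs(a->getNumOutputs())
    {
        assert(a->getNumOutputs() == b->getNumInputs());
        for (std::size_t c = 0; c < fBuffers.size(); c++) fPtrs[c] = fBuffers[c].data();
    }
    ~mini_sequencer() override { delete fA; delete fB; }
    mini_sequencer(const mini_sequencer&) = delete;
    mini_sequencer& operator=(const mini_sequencer&) = delete;
    int getNumInputs() override { return fA->getNumInputs(); }
    int getNumOutputs() override { return fB->getNumOutputs(); }
    void compute(int count, float** inputs, float** outputs) override
    {
        fA->compute(count, inputs, fPtrs.data());   // A writes the intermediate buffers
        fB->compute(count, fPtrs.data(), outputs);  // B reads them as its inputs
    }
};

// A , B: input and output channels are split between the two DSPs. Owns both DSPs.
class mini_parallelizer : public mini_dsp {
    mini_dsp* fA;
    mini_dsp* fB;
public:
    mini_parallelizer(mini_dsp* a, mini_dsp* b) : fA(a), fB(b) {}
    ~mini_parallelizer() override { delete fA; delete fB; }
    mini_parallelizer(const mini_parallelizer&) = delete;
    mini_parallelizer& operator=(const mini_parallelizer&) = delete;
    int getNumInputs() override { return fA->getNumInputs() + fB->getNumInputs(); }
    int getNumOutputs() override { return fA->getNumOutputs() + fB->getNumOutputs(); }
    void compute(int count, float** inputs, float** outputs) override
    {
        fA->compute(count, inputs, outputs);
        fB->compute(count, inputs + fA->getNumInputs(), outputs + fA->getNumOutputs());
    }
};
```

The parallelizer needs no extra memory: it only offsets the channel pointer arrays, whereas the sequencer has to own intermediate buffers.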

Finally, the createDSPCrossfader tool allows you to crossfade between two DSP modules. The crossfade parameter (as a slider) controls the mix between the outputs of the two modules. When Crossfade = 1, only the first DSP is computed; when Crossfade = 0, only the second DSP is computed; otherwise both DSPs are computed and mixed.
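The crossfade decision can be sketched for a single output channel. The linear mixing law c * A + (1 - c) * B is an assumption made for this illustration, and computeA/computeB are hypothetical callbacks standing for the compute calls of the two DSPs:

```cpp
#include <functional>
#include <vector>

// Renders 'count' frames of one output channel, crossfading between two sources.
// computeA / computeB are hypothetical callbacks standing for the two DSP compute calls.
void crossfade(float c, int count, float* out,
               const std::function<void(float*, int)>& computeA,
               const std::function<void(float*, int)>& computeB)
{
    if (c >= 1.0f) { computeA(out, count); return; }   // only the first DSP is computed
    if (c <= 0.0f) { computeB(out, count); return; }   // only the second DSP is computed
    // Both DSPs are computed, then linearly mixed (assumed mixing law).
    // A real implementation would preallocate these buffers outside the audio thread.
    std::vector<float> a(count), b(count);
    computeA(a.data(), count);
    computeB(b.data(), count);
    for (int i = 0; i < count; i++) out[i] = c * a[i] + (1.0f - c) * b[i];
}
```

Skipping the unused DSP at both ends of the range is what makes the crossfader cheap when it is not actually fading.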

Note that this idea of decorating or combining several C++ dsp objects can easily be extended in specific projects to meet other needs, like muting some part of a graph of several DSPs. But keep in mind that keeping the dsp API makes it possible to benefit from all the already available UI and audio classes.

Sample Accurate Control

DSP audio languages usually deal with several timing dimensions when treating control events and generating audio samples. For performance reasons, systems maintain a separate audio rate for sample generation and a control rate for asynchronous message handling.

The audio stream is most often computed by blocks, and control is updated between blocks. To smooth control parameter changes, some languages choose to interpolate parameter values between blocks.

In some cases control may be more finely interleaved with audio rendering, and some languages simply choose to interleave control and sample computation at sample level.

Although the Faust language permits the description of sample level algorithms (e.g., recursive filters), Faust-generated DSPs are usually computed in blocks. Underlying audio architectures repeatedly give a fixed size buffer to the DSP compute method, which consumes and produces audio samples.

Control to DSP Link

In the current version of the Faust generated code, the primary connection point between the control interface and the DSP code is simply a memory zone. For control inputs, the architecture layer continuously writes values in this zone, which is then sampled by the DSP code at the beginning of the compute method, and used with the same values throughout the call. Because of this simple control/DSP connection mechanism, only the most recent value written before the call is used by the DSP code.

Similarly, for control outputs, the DSP code inside the compute method possibly writes several values at the same memory zone, and only the last value will be seen by the control architecture layer when the method finishes.

Although this behaviour is satisfactory for most use-cases, some specific usages need to handle the complete stream of control values with sample accurate timing. For instance keeping all control messages and handling them at their exact position in time is critical for proper MIDI clock synchronisation.

Timestamped Control

The first step consists in extending the architecture control mechanism to deal with timestamped control events. Note that this requires the underlying event control layer to support this capability. The native MIDI API for instance is usually able to deliver timestamped MIDI messages.

The next step is to keep all timestamped events in a time ordered data structure to be continuously written by the control side, and read by the audio side.

Finally the sample computation has to take account of all queued control events, and correctly change the DSP control state at successive points in time.

Slices Based DSP Computation

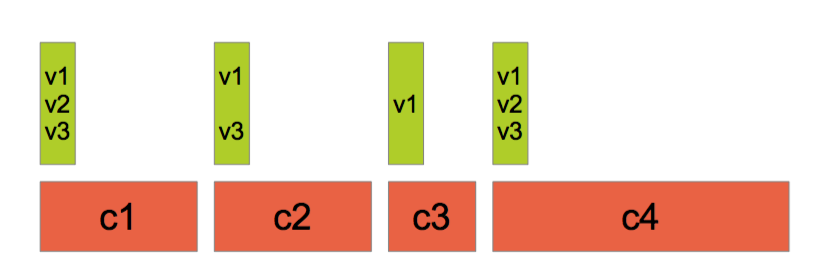

With timestamped control messages, changing control values at precise sample indexes on the audio stream becomes possible. A generic slices based DSP rendering strategy has been implemented in the timed_dsp class.

A ring-buffer is used to transmit the stream of timestamped events from the control layer to the DSP one. In the case of MIDI control for instance, the ring-buffer is written with a pair containing the timestamp expressed in samples (or microseconds) and the actual MIDI message each time one is received. In the DSP compute method, the ring-buffer will be read to handle all messages received during the previous audio block.
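A minimal sketch of such a ring-buffer, assuming a single control thread writing and a single audio thread reading (the classic lock-free single-producer/single-consumer scheme, C++17). TimedEvent and EventRing are hypothetical names, not the types used by timed_dsp:

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <optional>

// Hypothetical timestamped MIDI event: timestamp plus raw 3-byte message.
struct TimedEvent {
    double timestamp;        // in frames (or microseconds, depending on the driver convention)
    std::uint8_t bytes[3];   // raw MIDI message
};

// Single-producer / single-consumer ring buffer; N must be a power of two.
template <std::size_t N>
class EventRing {
    static_assert(N > 0 && (N & (N - 1)) == 0, "N must be a power of two");
    std::array<TimedEvent, N> fBuffer{};
    std::atomic<std::size_t> fWrite{0};   // only advanced by the control (producer) thread
    std::atomic<std::size_t> fRead{0};    // only advanced by the audio (consumer) thread

public:
    // Control thread: returns false when the ring is full (event dropped).
    bool push(const TimedEvent& ev)
    {
        std::size_t w = fWrite.load(std::memory_order_relaxed);
        std::size_t r = fRead.load(std::memory_order_acquire);
        if (w - r == N) return false;
        fBuffer[w & (N - 1)] = ev;
        fWrite.store(w + 1, std::memory_order_release);   // publish the event
        return true;
    }

    // Audio thread: returns std::nullopt when no event is pending.
    std::optional<TimedEvent> pop()
    {
        std::size_t r = fRead.load(std::memory_order_relaxed);
        std::size_t w = fWrite.load(std::memory_order_acquire);
        if (r == w) return std::nullopt;
        TimedEvent ev = fBuffer[r & (N - 1)];
        fRead.store(r + 1, std::memory_order_release);    // free the slot
        return ev;
    }
};
```

The counters only ever increase; unsigned wrap-around keeps w - r and the & (N - 1) masking correct because N divides the range of std::size_t.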

Since control values can change several times inside the same audio block, the DSP compute method cannot simply be called once with the total number of frames and the complete input/output audio buffers. The following strategy has to be used:

- several slices are defined, with control values changing between consecutive slices

- all control values having the same timestamp are handled together, and change the internal DSP control state. Each slice is computed up to the next control timestamp, until the end of the given audio block is reached

- in the figure above, four slices with the sequence of c1, c2, c3, c4 frames are successively given to the DSP compute method, with the appropriate part of the audio input/output buffers. Control values (appearing here as [v1,v2,v3], then [v1,v3], then [v1], then [v1,v2,v3] sets) are changed between slices
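The slicing loop itself can be sketched independently of any audio API. In this sketch (hypothetical names, events assumed already sorted by frame offset inside the current block), apply changes a control value and compute renders frames [offset, offset + length); in a real architecture it would call the DSP compute method with each channel pointer advanced by offset:

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical control event: the frame (inside the current block) at which
// a parameter takes a new value.
struct ControlEvent {
    int frame;    // events are assumed sorted by increasing frame
    int param;
    float value;
};

// Renders one block of 'count' frames as a sequence of slices.
// apply(param, value) changes the DSP control state;
// compute(offset, length) renders frames [offset, offset + length).
// Events with frame >= count are left for the next block (not applied here).
void computeSlices(int count, const std::vector<ControlEvent>& events,
                   const std::function<void(int, float)>& apply,
                   const std::function<void(int, int)>& compute)
{
    int offset = 0;
    std::size_t i = 0;
    while (offset < count) {
        // Handle together all events timestamped at the start of this slice.
        while (i < events.size() && events[i].frame <= offset) {
            apply(events[i].param, events[i].value);
            i++;
        }
        // The slice lasts until the next event, or until the end of the block.
        int end = (i < events.size() && events[i].frame < count) ? events[i].frame : count;
        compute(offset, end - offset);
        offset = end;
    }
}
```

For a 16-frame block with events at frames 0, 4, 4 and 10, this yields three slices, (0, 4), (4, 6) and (10, 6), the two events at frame 4 being applied together before the second slice.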

Since timestamped control messages from the previous audio block are used in the current block, control messages are always handled with one audio buffer latency.

Note that this slices based computation model can always be directly implemented on top of the underlying audio layer, without relying on the timed_dsp wrapper class.

Audio driver timestamping

Some audio drivers can get the timestamp of the first sample in the audio buffers, and will typically call the alternative DSP compute(double date_usec, int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs) method with the correct timestamp. By convention, a timestamp of -1 means 'no timestamp conversion': events already have a timestamp expressed in frames (see jackaudio_midi for an example driver using timestamps expressed in frames). The timed_dsp wrapper class is an example of a DSP class actually using the timestamp information.

Typical Use-Case

A typical Faust program can use the MIDI clock command signal to compute the Beats Per Minute (BPM) information for any synchronization need it may have.

Here is a simple example of a sinusoid generated with a frequency controlled by the MIDI clock stream, and starting/stopping when receiving the MIDI start/stop messages:

import("stdfaust.lib");

// square signal (1/0), changing state

// at each received clock

clocker = checkbox("MIDI clock[midi:clock]");

// ON/OFF button controlled

// with MIDI start/stop messages

play = checkbox("On/Off [midi:start][midi:stop]");

// detect front

front(x) = (x-x') != 0.0;

// count number of peaks during one second

freq(x) = (x-x@ma.SR) : + ~ _;

process = os.osc(8*freq(front(clocker))) * play;

Each received group of 24 clocks will move the time position by exactly one beat. It is therefore mandatory never to lose any MIDI clock message, and the standard memory-zone-based model, with its 'use the last received control value' semantics, is not adapted.
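The arithmetic behind this statement: MIDI clock messages are sent 24 times per quarter note, so the tempo follows directly from the number of clocks counted over a time window. A minimal sketch (the function name is hypothetical):

```cpp
// MIDI clock messages are sent 24 times per quarter note (one beat).
constexpr double kClocksPerBeat = 24.0;

// Tempo in beats per minute, from the number of clocks received during 'seconds' seconds.
double bpmFromClocks(double clocks, double seconds)
{
    return (clocks / kClocksPerBeat) * 60.0 / seconds;
}
```

For example, 48 clocks received in one second correspond to 2 beats per second, that is 120 BPM, while a single lost clock shifts the musical position by 1/24 of a beat.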

The DSP object that needs to be controlled using the sample-accurate machinery can then simply be decorated using the timed_dsp class with the following kind of code:

dsp* sample_accurate_dsp = new timed_dsp(DSP);

Note that the described sample accurate MIDI clock synchronization model can currently only be used at input level. Because of the simple memory zone based connection point between the control interface and the DSP code, output controls (like bargraph) cannot generate a stream of control values. Thus a reliable MIDI clock generator cannot be implemented with the current approach.

Polyphonic Instruments

Directly programming polyphonic instruments in Faust is perfectly possible. It is also necessary when very complex signal interactions between the different voices have to be described.

But since all voices would always be computed, this approach could be too CPU costly for simpler or more limited needs. In this case describing a single voice in a Faust DSP program and externally combining several of them with a special polyphonic instrument aware architecture file is a better solution. Moreover, this special architecture file takes care of dynamic voice allocations and control MIDI messages decoding and mapping.

Polyphonic ready DSP Code

By convention, Faust architecture files with polyphonic capabilities expect to find control parameters named freq, gain, and gate. A declare nvoices "8"; metadata line, with the desired number of voices, can be added in the source code.

In the case of MIDI control, the freq parameter (which should be a frequency) will be automatically computed from MIDI note numbers, gain (which should be a value between 0 and 1) from velocity and gate from keyon/keyoff events. Thus, gate can be used as a trigger signal for any envelope generator, etc.
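The usual conversions can be sketched as follows, assuming 12-tone equal temperament with note 69 (A4) at 440 Hz and a linear velocity mapping; the exact mapping functions used by a given polyphonic architecture file may differ, and the function names are hypothetical:

```cpp
#include <cmath>

// 12-tone equal temperament: each semitone multiplies the frequency by 2^(1/12),
// with MIDI note 69 (A4) at 440 Hz.
float midiNoteToFreq(int note)
{
    return 440.0f * std::pow(2.0f, (note - 69) / 12.0f);
}

// Linear mapping of MIDI velocity (0..127) to a gain between 0 and 1.
float velocityToGain(int velocity)
{
    return velocity / 127.0f;
}
```

With these mappings, a key-on for note 81 at full velocity would set freq to 880 Hz and gain to 1, and gate to 1 until the matching key-off.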

Using the mydsp_poly Class

The single voice has to be described by a Faust DSP program, the mydsp_poly class is then used to combine several voices and create a polyphonic ready DSP:

- the poly-dsp.h file contains the definition of the mydsp_poly class used to wrap the DSP voice into the polyphonic architecture. This class maintains an array of dsp* objects, manages dynamic voice allocation, control MIDI message decoding and mapping, mixing of all running voices, and stopping a voice when its output level decreases below a given threshold

- as a subclass of dsp, the mydsp_poly class redefines the buildUserInterface method. By convention, all allocated voices are grouped in a global Polyphonic tabgroup. The first tab contains a Voices group, a master-like component used to change parameters on all voices at the same time, with a Panic button to be used to stop running voices, followed by one tab for each voice. Graphical User Interface components will then reflect the multi-voice structure of the new polyphonic DSP
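Dynamic voice allocation can be sketched with a hypothetical VoiceAllocator that picks a free voice and otherwise steals the oldest one. This is a simplification and not the mydsp_poly algorithm: here a voice is freed immediately on key-off, whereas the text above describes voices being stopped only once their output level falls below a threshold:

```cpp
#include <vector>

// Hypothetical voice bookkeeping: which note each voice plays and when it started.
class VoiceAllocator {
    struct Voice {
        int note = -1;   // -1 means the voice is free
        long date = 0;   // keyOn order, used to find the oldest voice
    };
    std::vector<Voice> fVoices;
    long fDate = 0;

public:
    explicit VoiceAllocator(int nvoices) : fVoices(nvoices) {}   // nvoices must be > 0

    // Returns the voice index to use for 'note': a free voice if any,
    // otherwise the oldest playing voice is stolen.
    int keyOn(int note)
    {
        int chosen = -1;
        for (int i = 0; i < int(fVoices.size()); i++) {
            if (fVoices[i].note == -1) { chosen = i; break; }
        }
        if (chosen == -1) {
            chosen = 0;
            for (int i = 1; i < int(fVoices.size()); i++) {
                if (fVoices[i].date < fVoices[chosen].date) chosen = i;
            }
        }
        fVoices[chosen].note = note;
        fVoices[chosen].date = ++fDate;
        return chosen;
    }

    // Frees the voice playing 'note' (simplification: no release phase).
    // Returns its index, or -1 if no voice plays that note.
    int keyOff(int note)
    {
        for (int i = 0; i < int(fVoices.size()); i++) {
            if (fVoices[i].note == note) { fVoices[i].note = -1; return i; }
        }
        return -1;
    }
};
```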

The resulting polyphonic DSP object can be used as usual, connected with the needed audio driver, and possibly other UI control objects like OSCUI, httpdUI, etc. Having this new UI hierarchical view allows complete OSC control of each single voice and their control parameters, but also all voices using the master component.

The following OSC messages reflect the same DSP code either compiled normally, or in polyphonic mode (only part of the OSC hierarchies are displayed here):

// Mono mode

/Organ/vol f -10.0

/Organ/pan f 0.0

// Polyphonic mode

/Polyphonic/Voices/Organ/pan f 0.0

/Polyphonic/Voices/Organ/vol f -10.0

...

/Polyphonic/Voice1/Organ/vol f -10.0

/Polyphonic/Voice1/Organ/pan f 0.0

...

/Polyphonic/Voice2/Organ/vol f -10.0

/Polyphonic/Voice2/Organ/pan f 0.0

Note that to save space on the screen, the /Polyphonic/VoiceX/xxx syntax is used when the number of allocated voices is less than 8, and the /Polyphonic/VX/xxx syntax is used when more voices are allocated.

The polyphonic instrument allocation takes the DSP to be used for one voice, the desired number of voices, the dynamic voice allocation state, and the group state which controls if separated voices are displayed or not:

dsp* poly = new mydsp_poly(dsp, 2, true, true);

Note that a polyphonic instrument may also be used outside of a MIDI control context. With the following code, all voices will always be running and can be controlled with OSC messages for instance:

dsp* poly = new mydsp_poly(dsp, 8, false, true);

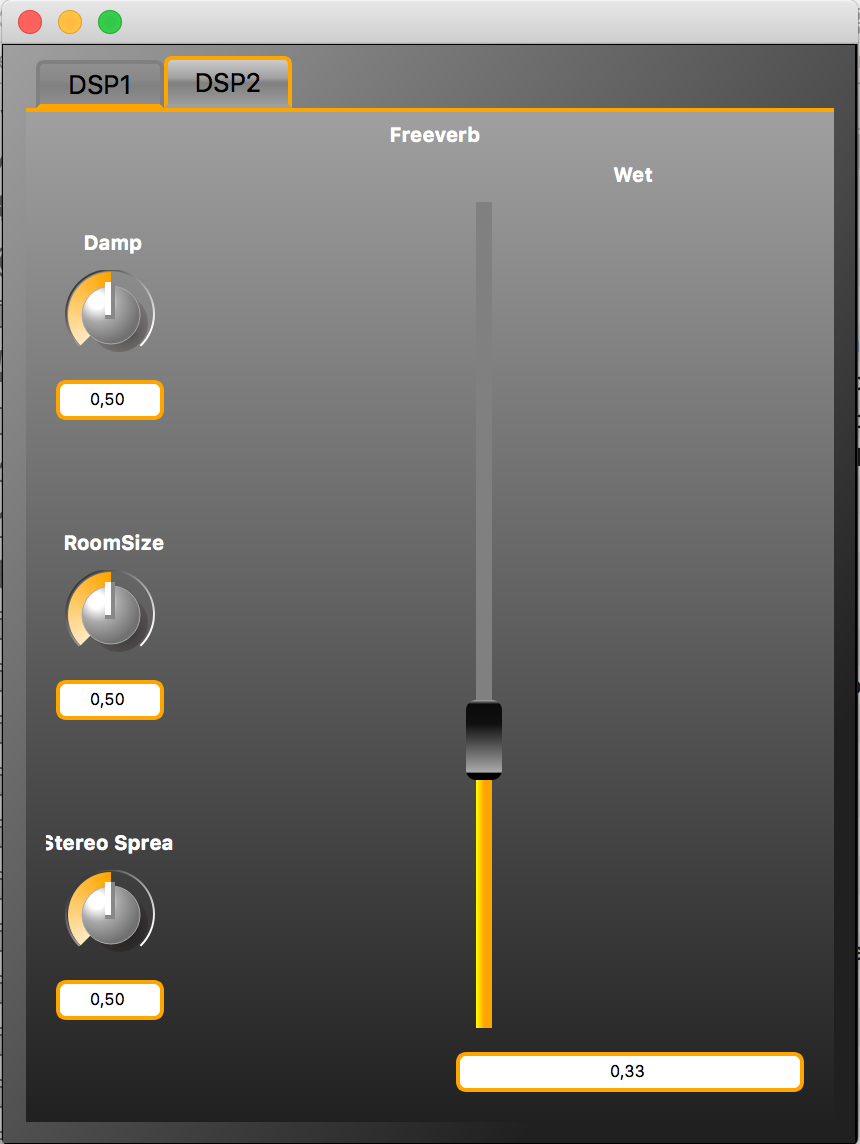

Polyphonic Instrument With a Global Output Effect

Polyphonic instruments may be used with an output effect. Putting that effect in the main Faust code is generally not a good idea, since it would be instantiated for each voice, which would be very inefficient.

A convention has been defined to use the effect = some effect; line in the DSP source code. The actual effect definition has to be extracted from the DSP code, compiled separately, and then combined using the dsp_sequencer class previously presented to connect the polyphonic DSP in sequence with a unique global effect, with something like:

dsp* poly = new dsp_sequencer(new mydsp_poly(dsp, 2, true, true), new effect());


Some helper classes like the base dsp_poly_factory class, and concrete implementations llvm_dsp_poly_factory when using the LLVM backend or interpreter_dsp_poly_factory when using the Interpreter backend can also be used to automatically handle the voice and effect part of the DSP.

Controlling the Polyphonic Instrument

The mydsp_poly class is also ready for MIDI control (as a class implementing the midi interface) and can react to keyOn/keyOff and pitchWheel events. Other MIDI control parameters can directly be added in the DSP source code as MIDI metadata. To receive MIDI events, the created polyphonic DSP will be automatically added to the midi_handler object when calling buildUserInterface on a MidiUI object.

Deploying the Polyphonic Instrument

Several architecture files and associated scripts have been updated to handle polyphonic instruments.

As an example on OSX, the script faust2caqt foo.dsp can be used to create a polyphonic CoreAudio/QT application. The desired number of voices is either declared in an nvoices metadata or changed with the -nvoices num additional parameter. MIDI control is activated using the -midi parameter.

The number of allocated voices can also be changed at runtime using the -nvoices parameter to change the default value (for instance ./foo -nvoices 16). Several other scripts have been adapted using the same conventions.

To combine the instrument with a global output effect, a command like:

faust2caqt -midi -nvoices 12 inst.dsp -effect effect.dsp

has to be used, with inst.dsp and effect.dsp in the same folder, and with the number of outputs of the instrument matching the number of inputs of the effect.

Polyphonic ready faust2xx scripts will then compile the polyphonic instrument and the effect, combine them in sequence, and create a ready-to-use DSP.

Custom Memory Manager

In C and C++, the Faust compiler produces a class (or a struct in C), to be instantiated to create each DSP instance. The standard generation model produces a flat memory layout, where all fields (scalars and arrays) are simply consecutive in the generated code (following the compilation order). So the DSP is allocated on a single block of memory, either on the stack or the heap depending on the use-case. The following DSP program:

import("stdfaust.lib");

gain = hslider("gain", 0.5, 0, 1, 0.01);

feedback = hslider("feedback", 0.8, 0, 1, 0.01);

echo(del_sec, fb, g) = + ~ de.delay(50000, del_samples) * fb * g

with {

del_samples = del_sec * ma.SR;

};

process = echo(1.6, 0.6, 0.7), echo(0.7, feedback, gain);

will have the flat memory layout:

int IOTA0;

int fSampleRate;

int iConst1;

float fRec0[65536];

FAUSTFLOAT fHslider0;

FAUSTFLOAT fHslider1;

int iConst2;

float fRec1[65536];

So the scalars fHslider0 and fHslider1 correspond to the gain and feedback controllers. The iConst1 and iConst2 values are typically computed once at init time using the dynamically given fSampleRate value, and used in the DSP loop later on. The fRec0 and fRec1 arrays are used for the recursive delays, and finally the shared IOTA0 index is used to access them.
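The indexing scheme of the generated code can be reproduced in a small sketch. The delay buffer size appears to be rounded up to a power of two (65536 for a maximum delay of 50000 samples), so that the wrap-around is a cheap bit mask (& 65535) applied to an ever-increasing write index (IOTA0) instead of a modulo. The constant 0.419999987f in the compute method shown below is simply the folded product 0.6 * 0.7 of the first echo, rounded to float. The FeedbackDelay struct and nextPow2 function are hypothetical helpers:

```cpp
#include <cstddef>
#include <vector>

// Smallest power of two >= n (for n > 0).
std::size_t nextPow2(std::size_t n)
{
    std::size_t p = 1;
    while (p < n) p <<= 1;
    return p;
}

// Recursive delay y[n] = x[n] + g * y[n - d], in the style of the generated code:
// power-of-two ring buffer, increasing write index (like IOTA0), masked accesses.
struct FeedbackDelay {
    std::vector<float> fRec;
    std::size_t fMask;
    std::size_t fIota = 0;   // unsigned, so wrap-around is well defined

    explicit FeedbackDelay(std::size_t maxDelay)
        : fRec(nextPow2(maxDelay + 1), 0.0f), fMask(fRec.size() - 1) {}

    // 'd' must satisfy 1 <= d <= maxDelay.
    float tick(float x, int d, float g)
    {
        float y = x + g * fRec[(fIota - static_cast<std::size_t>(d)) & fMask];
        fRec[fIota & fMask] = y;
        fIota++;
        return y;
    }
};
```

Feeding a unit impulse with d = 3 and g = 0.5 produces 1 at frame 0, 0.5 at frame 3 and 0.25 at frame 6, the echo pattern expected from such a comb filter.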

Here is the generated compute function:

virtual void compute(int count, FAUSTFLOAT** inputs, FAUSTFLOAT** outputs) {

FAUSTFLOAT* input0 = inputs[0];

FAUSTFLOAT* input1 = inputs[1];

FAUSTFLOAT* output0 = outputs[0];

FAUSTFLOAT* output1 = outputs[1];

float fSlow0 = float(fHslider0) * float(fHslider1);

for (int i0 = 0; i0 < count; i0 = i0 + 1) {

fRec0[IOTA0 & 65535]

= float(input0[i0]) + 0.419999987f * fRec0[(IOTA0 - iConst1) & 65535];

output0[i0] = FAUSTFLOAT(fRec0[IOTA0 & 65535]);

fRec1[IOTA0 & 65535]

= float(input1[i0]) + fSlow0 * fRec1[(IOTA0 - iConst2) & 65535];

output1[i0] = FAUSTFLOAT(fRec1[IOTA0 & 65535]);

IOTA0 = IOTA0 + 1;

}

}

The -mem option

On audio boards where the memory is split into several blocks (like SRAM, SDRAM…) with different access times, it becomes important to refine the DSP memory model so that the DSP structure is not allocated on a single block of memory, but possibly distributed over all available blocks. The idea is then to allocate the parts of the DSP that are often accessed in fast memory and the other ones in slow memory.

The first remark is that scalar values will typically stay in the DSP structure, and the point is to move the big array buffers (fRec0 and fRec1 in the example) into separated memory blocks. The -mem (--memory-manager) option can be used to generate adapted code. On the previous DSP program, we now have the following generated C++ code:

int IOTA0;

int fSampleRate;

int iConst1;

float* fRec0;

FAUSTFLOAT fHslider0;

FAUSTFLOAT fHslider1;

int iConst2;

float* fRec1;

The two fRec0 and fRec1 arrays have become pointers, and will be allocated elsewhere.

An external memory manager is needed to interact with the DSP code. The proposed model does the following:

- in a first step, the generated C++ code informs the memory allocator about its needs in terms of 1) the number of separate memory zones, with 2) their size and 3) their access characteristics, like the number of reads and writes for each frame computation. This is done by generating an additional static memoryInfo method

- with the complete information available, the memory manager can then define the best strategy to allocate all separate memory zones

- an additional memoryCreate method is generated to allocate each of the separate zones

- an additional memoryDestroy method is generated to deallocate each of the separate zones

Here is the API for the memory manager:

struct dsp_memory_manager {

virtual ~dsp_memory_manager() {}

/**

* Inform the Memory Manager with the number of expected memory zones.

* @param count - the number of memory zones

*/

virtual void begin(size_t count) {}

/**

* Give the Memory Manager information on a given memory zone.

* @param size - the size in bytes of the memory zone

* @param reads - the number of Read access to the zone used to compute one frame

* @param writes - the number of Write access to the zone used to compute one frame

*/

virtual void info(size_t size, size_t reads, size_t writes) {}

/**

* Inform the Memory Manager that all memory zones have been described,

* to possibly start a 'compute the best allocation strategy' step.

*/

virtual void end() {}

/**

* Allocate a memory zone.

* @param size - the memory zone size in bytes

*/

virtual void* allocate(size_t size) = 0;

/**

* Destroy a memory zone.

* @param ptr - the memory zone pointer to be deallocated

*/

virtual void destroy(void* ptr) = 0;

};

A static class member is added to the mydsp class, to be set with a concrete memory manager later on:

dsp_memory_manager* mydsp::fManager = nullptr;

The C++ generated code now contains a new memoryInfo method, which interacts with the memory manager:

static void memoryInfo() {

fManager->begin(3);

// mydsp

fManager->info(56, 9, 1);

// fRec0

fManager->info(262144, 2, 1);

// fRec1

fManager->info(262144, 2, 1);

fManager->end();

}

The begin method is first generated to announce that three separate memory zones will be needed. Then three consecutive calls to the info method are generated: one for the DSP object itself, and one for each recursive delay array. The end method is then called to finish the memory layout description, and let the memory manager prepare the actual allocations.
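With this information, a memory manager can decide where each zone should live. Here is a sketch of one possible, hypothetical policy: zones are ranked by access density, (reads + writes) / size, and greedily placed in a fast memory of limited size. Calling it from end() would require info() to record each ZoneInfo first; mapping the later allocate() calls back to zones relies on them arriving in the same order as the info() calls, which appears to be the case in the generated code shown here:

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// What info() reports for each zone, in call order.
struct ZoneInfo {
    std::size_t size, reads, writes;
};

// Hypothetical placement policy: the most densely accessed zones go first
// into a fast memory of 'fastBudget' bytes. Returns, per zone (in call order),
// true if the zone should be allocated in fast memory.
std::vector<bool> planFastMemory(const std::vector<ZoneInfo>& zones, std::size_t fastBudget)
{
    std::vector<std::size_t> order(zones.size());
    for (std::size_t i = 0; i < order.size(); i++) order[i] = i;

    auto density = [&](std::size_t i) {
        return double(zones[i].reads + zones[i].writes)
             / double(std::max<std::size_t>(zones[i].size, 1));
    };
    // Stable sort: zones with equal density keep their declaration order.
    std::stable_sort(order.begin(), order.end(),
                     [&](std::size_t a, std::size_t b) { return density(a) > density(b); });

    std::vector<bool> fast(zones.size(), false);
    std::size_t used = 0;
    for (std::size_t i : order) {
        if (used + zones[i].size <= fastBudget) {
            fast[i] = true;
            used += zones[i].size;
        }
    }
    return fast;
}
```

With the three zones reported above (56 bytes with 9 reads and 1 write, then twice 262144 bytes with 2 reads and 1 write) and a 262200-byte fast memory, the mydsp structure and fRec0 would go to fast memory and fRec1 to slow memory.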

Note that the memory layout information is also available in the JSON file generated using the -json option, to possibly be used statically by the architecture machinery (that is, at compile time). With the previous program, the memory layout section is:

"memory_layout": [

{ "name": "mydsp", "type": "kObj_ptr", "size": 0, "size_bytes": 56, "read": 9, "write": 1 },

{ "name": "IOTA0", "type": "kInt32", "size": 1, "size_bytes": 4, "read": 7, "write": 1 },

{ "name": "iConst1", "type": "kInt32", "size": 1, "size_bytes": 4, "read": 1, "write": 0 },

{ "name": "fRec0", "type": "kFloat_ptr", "size": 65536, "size_bytes": 262144, "read": 2, "write": 1 },

{ "name": "iConst2", "type": "kInt32", "size": 1, "size_bytes": 4, "read": 1, "write": 0 },

{ "name": "fRec1", "type": "kFloat_ptr", "size": 65536, "size_bytes": 262144, "read": 2, "write": 1 }

]

Finally the memoryCreate and memoryDestroy methods are generated. The memoryCreate method asks the memory manager to allocate the fRec0 and fRec1 buffers:

void memoryCreate() {

fRec0 = static_cast<float*>(fManager->allocate(262144));

fRec1 = static_cast<float*>(fManager->allocate(262144));

}

And the memoryDestroy method asks the memory manager to destroy them:

void memoryDestroy() {

fManager->destroy(fRec0);

fManager->destroy(fRec1);

}

Additional static create/destroy methods are generated:

static mydsp* create() {

mydsp* dsp = new (fManager->allocate(sizeof(mydsp))) mydsp();

dsp->memoryCreate();

return dsp;

}

static void destroy(dsp* dsp) {

static_cast<mydsp*>(dsp)->memoryDestroy();

fManager->destroy(dsp);

}

Note that C++ placement new is used to construct the DSP object itself in the memory block returned by the memory manager.
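For readers less familiar with placement new, here is a self-contained sketch of the general pattern, with a hypothetical toy_dsp type and calloc/free standing in for the memory manager: the object is constructed inside memory obtained elsewhere, and is later destroyed by an explicit destructor call followed by returning the memory to the same allocator (never with delete):

```cpp
#include <cstdlib>
#include <new>

// Hypothetical type standing in for the generated mydsp class.
struct toy_dsp {
    float fState[4] = {0, 0, 0, 0};
};

// Construct an object inside memory obtained from a custom allocator (here calloc),
// as create() does with fManager->allocate(sizeof(mydsp)).
toy_dsp* createInPlace()
{
    void* mem = std::calloc(1, sizeof(toy_dsp));
    if (!mem) return nullptr;
    return new (mem) toy_dsp();   // placement new: runs the constructor, allocates nothing
}

// A placement-new'ed object must not be passed to delete: call its destructor
// explicitly, then give the raw memory back to the allocator that provided it.
void destroyInPlace(toy_dsp* d)
{
    if (!d) return;
    d->~toy_dsp();
    std::free(d);
}
```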

Static tables

When rdtable or rwtable primitives are used in the source code, the C++ class will contain a table shared by all instances of the class. By default, this table is generated as a static class array, and so allocated in the application global static memory.

Taking the following DSP example:

process = (waveform {10,20,30,40,50,60,70}, %(7)~+(3) : rdtable),

(waveform {1.1,2.2,3.3,4.4,5.5,6.6,7.7}, %(7)~+(3) : rdtable);

Here is the generated code in default mode:

...

static int itbl0mydspSIG0[7];

static float ftbl1mydspSIG1[7];

class mydsp : public dsp {

...

public:

...

static void classInit(int sample_rate) {

mydspSIG0* sig0 = newmydspSIG0();

sig0->instanceInitmydspSIG0(sample_rate);

sig0->fillmydspSIG0(7, itbl0mydspSIG0);

mydspSIG1* sig1 = newmydspSIG1();

sig1->instanceInitmydspSIG1(sample_rate);

sig1->fillmydspSIG1(7, ftbl1mydspSIG1);

deletemydspSIG0(sig0);

deletemydspSIG1(sig1);

}

virtual void init(int sample_rate) {

classInit(sample_rate);

instanceInit(sample_rate);

}

virtual void instanceInit(int sample_rate) {

instanceConstants(sample_rate);

instanceResetUserInterface();

instanceClear();

}

...

}

The two itbl0mydspSIG0 and ftbl1mydspSIG1 tables are static global arrays, filled in the classInit method. The architecture code will typically call the init method (which calls classInit) on a given DSP, to initialize both the class-related tables and the DSP instance itself. If several DSPs are going to be allocated, calling classInit only once, then the instanceInit method on each allocated DSP, is the way to go.
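The recommended sequence can be illustrated with a hypothetical toy_table_dsp class that only mimics the structure of the generated code: a static table shared by all instances and filled by classInit, plus per-instance state set by instanceInit:

```cpp
// Hypothetical class mimicking the structure of the generated code.
class toy_table_dsp {
    static int sTable[7];          // shared by all instances, like itbl0mydspSIG0
    static int sClassInitCalls;    // illustration only: counts classInit() calls
    int fSampleRate = 0;           // per-instance state

public:
    static void classInit(int /*sample_rate*/)
    {
        for (int i = 0; i < 7; i++) sTable[i] = 10 * (i + 1);   // 10, 20, ..., 70
        sClassInitCalls++;
    }
    void instanceInit(int sample_rate) { fSampleRate = sample_rate; }
    void init(int sample_rate)
    {
        classInit(sample_rate);
        instanceInit(sample_rate);
    }
    int read(int index) const { return sTable[index % 7]; }
    int getSampleRate() const { return fSampleRate; }
    static int classInitCalls() { return sClassInitCalls; }
};

int toy_table_dsp::sTable[7];
int toy_table_dsp::sClassInitCalls = 0;
```

Calling toy_table_dsp::classInit once, then instanceInit on each of several instances, fills the shared table a single time, whereas calling init on every instance would refill it repeatedly.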

In the -mem mode, the generated C++ code is now:

...

static int* itbl0mydspSIG0 = 0;

static float* ftbl1mydspSIG1 = 0;

class mydsp : public dsp {

...

public:

...

static dsp_memory_manager* fManager;

static void classInit(int sample_rate) {

mydspSIG0* sig0 = newmydspSIG0(fManager);

sig0->instanceInitmydspSIG0(sample_rate);

itbl0mydspSIG0 = static_cast<int*>(fManager->allocate(28));

sig0->fillmydspSIG0(7, itbl0mydspSIG0);

mydspSIG1* sig1 = newmydspSIG1(fManager);

sig1->instanceInitmydspSIG1(sample_rate);

ftbl1mydspSIG1 = static_cast<float*>(fManager->allocate(28));

sig1->fillmydspSIG1(7, ftbl1mydspSIG1);

deletemydspSIG0(sig0, fManager);

deletemydspSIG1(sig1, fManager);

}

static void classDestroy() {

fManager->destroy(itbl0mydspSIG0);

fManager->destroy(ftbl1mydspSIG1);

}

virtual void init(int sample_rate) {}

virtual void instanceInit(int sample_rate) {

instanceConstants(sample_rate);

instanceResetUserInterface();

instanceClear();

}

...

}

The two itbl0mydspSIG0 and ftbl1mydspSIG1 tables are generated as static global pointers. The classInit method uses the fManager object to allocate the tables. A new classDestroy method is generated to deallocate them. Finally, the init method is now empty, since the architecture file is supposed to call the classInit/classDestroy methods once to allocate and deallocate the static tables, and the instanceInit method on each allocated DSP.

The memoryInfo method now has the following shape, with the two itbl0mydspSIG0 and ftbl1mydspSIG1 tables:

static void memoryInfo() {

fManager->begin(6);

// mydspSIG0

fManager->info(4, 0, 0);

// itbl0mydspSIG0

fManager->info(28, 1, 0);

// mydspSIG1

fManager->info(4, 0, 0);

// ftbl1mydspSIG1

fManager->info(28, 1, 0);

// mydsp

fManager->info(28, 0, 0);

// iRec0

fManager->info(8, 3, 2);

fManager->end();

}

Defining and using a custom memory manager

When compiled with the -mem option, the client code has to define a memory manager class adapted to its specific needs. A custom memory manager is implemented by subclassing the dsp_memory_manager abstract base class and defining the begin, end, info, allocate and destroy methods. Here is an example of a simple heap-allocating manager (implemented in the dummy-mem.cpp architecture file):

#include <cstdlib>  // std::calloc, std::free

struct malloc_memory_manager : public dsp_memory_manager {

    virtual void begin(size_t count)
    {
        // TODO: use 'count'
    }

    virtual void end()
    {
        // TODO: start sorting the list of memory zones, to prepare
        // for the future allocations done in memoryCreate()
    }

    virtual void info(size_t size, size_t reads, size_t writes)
    {
        // TODO: use 'size', 'reads' and 'writes'
        // to prepare memory layout for allocation
    }

    virtual void* allocate(size_t size)
    {
        // TODO: refine the allocation scheme to take
        // into account what was collected in info
        return std::calloc(1, size);
    }

    virtual void destroy(void* ptr)
    {
        // TODO: refine the allocation scheme to take
        // into account what was collected in info
        std::free(ptr);
    }

};

The specialized malloc_memory_manager class can now be used the following way:

// Allocate a global static custom memory manager
static malloc_memory_manager gManager;

// Set up the global custom memory manager on the DSP class
mydsp::fManager = &gManager;

// Make the memory manager get information on all subcontainers,
// static tables, DSP and arrays and prepare memory allocation
mydsp::memoryInfo();

// Done once before allocating any DSP, to allocate static tables
mydsp::classInit(44100);

// 'Placement new' and 'memoryCreate' are used inside the 'create' method
dsp* DSP = mydsp::create();

// Init the DSP instance
DSP->instanceInit(44100);
...
... // use the DSP
...
// 'memoryDestroy' and memory manager 'destroy' are used to deallocate memory
mydsp::destroy(DSP);

// Done once after the last DSP has been destroyed
mydsp::classDestroy();

Note that the client code can still choose to allocate/deallocate the DSP instance using the regular C++ new/delete operators:

// Allocate a global static custom memory manager
static malloc_memory_manager gManager;

// Set up the global custom memory manager on the DSP class
mydsp::fManager = &gManager;

// Make the memory manager get information on all subcontainers,
// static tables, DSP and arrays and prepare memory allocation
mydsp::memoryInfo();

// Done once before allocating any DSP, to allocate static tables
mydsp::classInit(44100);

// Use regular C++ new
dsp* DSP = new mydsp();

// Allocate internal buffers
DSP->memoryCreate();

// Init the DSP instance
DSP->instanceInit(44100);
...
... // use the DSP
...
// Deallocate internal buffers
DSP->memoryDestroy();

// Use regular C++ delete
delete DSP;

// Done once after the last DSP has been destroyed
mydsp::classDestroy();

Or even on the stack with:

...
// Allocation on the stack
mydsp DSP;

// Allocate internal buffers
DSP.memoryCreate();

// Init the DSP instance
DSP.instanceInit(44100);
...
... // use the DSP
...
// Deallocate internal buffers
DSP.memoryDestroy();
...

More complex custom memory allocators, such as real-time memory allocators, can be developed by refining this malloc_memory_manager example. The OWL architecture file, for instance, uses a custom OwlMemoryManager.
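As one possible refinement in that direction, here is a minimal sketch of an arena (bump) allocator: info() sums the announced zone sizes, end() reserves a single zeroed block, and allocate() then serves requests from that block without calling the heap again. It assumes every allocate() request was announced by a matching info() call, and returns nullptr otherwise. dsp_memory_manager_sketch is a hypothetical stand-in mirroring the five methods listed earlier so the example compiles without Faust headers; in an architecture file the class would derive from dsp_memory_manager instead. The 16-byte alignment is also an assumption.

```cpp
#include <cstddef>
#include <cstdlib>

// Hypothetical stand-in mirroring the begin/info/end/allocate/destroy methods
// of Faust's dsp_memory_manager, so this sketch compiles on its own.
struct dsp_memory_manager_sketch {
    virtual ~dsp_memory_manager_sketch() {}
    virtual void begin(size_t count) = 0;
    virtual void info(size_t size, size_t reads, size_t writes) = 0;
    virtual void end() = 0;
    virtual void* allocate(size_t size) = 0;
    virtual void destroy(void* ptr) = 0;
};

// Arena allocator: one heap block reserved in end(), then pointer bumping.
struct arena_memory_manager : public dsp_memory_manager_sketch {
    static const size_t kAlign = 16;  // assumed alignment for every zone
    size_t fTotal = 0;                // bytes announced through info()
    size_t fOffset = 0;               // next free byte in the arena
    char* fArena = nullptr;

    static size_t roundUp(size_t n) { return (n + kAlign - 1) & ~(kAlign - 1); }

    void begin(size_t) override { fTotal = 0; }
    void info(size_t size, size_t, size_t) override { fTotal += roundUp(size); }
    void end() override {
        std::free(fArena);  // release a previous arena, if any
        fArena = static_cast<char*>(std::calloc(1, fTotal));  // zeroed, like the calloc-based manager
        fOffset = 0;
    }
    void* allocate(size_t size) override {
        size_t needed = roundUp(size);
        if (!fArena || fOffset + needed > fTotal) return nullptr;  // not announced: arena exhausted
        void* ptr = fArena + fOffset;
        fOffset += needed;
        return ptr;
    }
    void destroy(void*) override {}  // zones are released together with the arena
    ~arena_memory_manager() override { std::free(fArena); }
};
```

Since destroy() is a no-op, memory released during classInit (for instance the temporary mydspSIG0 object) is simply not reused; the trade-off is a single up-front allocation and no heap traffic afterwards.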

Allocating several DSP instances

In a multiple instances scheme, static data structures shared by all instances have to be allocated once at the beginning using mydsp::classInit, and deallocated at the end using mydsp::classDestroy. Individual instances are then allocated with mydsp::create() and deallocated with mydsp::destroy(), or alternatively with regular new/delete or stack allocation, as explained before.
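A toy illustration of this scheme, using a hypothetical toy_dsp class rather than real generated code: its static table plays the role of ftbl1mydspSIG1 and is built once by classInit, while each instance only resets its own state in instanceInit. Plain new/delete is used for the instances here; with -mem, mydsp::create and mydsp::destroy would take their place. makeVoices and freeVoices are illustrative helper names.

```cpp
#include <cstdlib>
#include <vector>

// Hypothetical stand-in for a generated DSP class: one static table shared
// by all instances, plus per-instance state.
class toy_dsp {
  public:
    static float* sTable;              // shared by all instances
    static const int kTableSize = 7;
    float fState = 0.f;                // per-instance state

    static void classInit(int /*sample_rate*/) {
        sTable = static_cast<float*>(std::calloc(kTableSize, sizeof(float)));
        for (int i = 0; i < kTableSize; i++) sTable[i] = float(i);
    }
    static void classDestroy() { std::free(sTable); sTable = nullptr; }
    void instanceInit(int /*sample_rate*/) { fState = 0.f; }
};
float* toy_dsp::sTable = nullptr;

// classInit once, then instanceInit on each allocated instance.
inline std::vector<toy_dsp*> makeVoices(int n, int sample_rate) {
    toy_dsp::classInit(sample_rate);
    std::vector<toy_dsp*> voices;
    for (int i = 0; i < n; i++) {
        toy_dsp* voice = new toy_dsp();
        voice->instanceInit(sample_rate);
        voices.push_back(voice);
    }
    return voices;
}

// Delete every instance, then classDestroy once after the last one.
inline void freeVoices(std::vector<toy_dsp*>& voices) {
    for (toy_dsp* voice : voices) delete voice;
    voices.clear();
    toy_dsp::classDestroy();
}
```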

Measuring the DSP CPU

The measure_dsp class defined in the faust/dsp/dsp-bench.h file can be used to decorate a given DSP object and measure the CPU consumption of its compute method. Results are given in megabytes per second (higher is better) and as DSP CPU load at 44.1 kHz. Here is a C++ code example of its use:

static void bench(dsp* dsp, const string& name)
{
    // Init the DSP
    dsp->init(48000);

    // Wrap it with a 'measure_dsp' decorator
    measure_dsp mes(dsp, 1024, 5);

    // Measure the CPU use
    mes.measure();

    // Return the MBytes/sec and relative standard deviation values
    std::pair<double, double> res = mes.getStats();

    // Print the stats
    cout << name << " MBytes/sec : " << res.first
         << " " << "(DSP CPU % : " << (mes.getCPULoad() * 100) << ")" << endl;
}
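The "DSP CPU" figure above is a fraction of real time. One common way to define such a load, which may differ from the exact formula measure_dsp uses internally, is the time spent computing one buffer divided by the time that buffer lasts when played back (frames / sample rate). A minimal sketch, where cpuLoad and meanSecondsPerBuffer are hypothetical helpers and the timed callable stands in for a DSP compute call:

```cpp
#include <chrono>
#include <functional>

// Fraction of real time used: compute time for one buffer divided by the
// playback duration of that buffer (frames / sample_rate).
inline double cpuLoad(double seconds_per_buffer, int frames, int sample_rate) {
    double buffer_duration = double(frames) / double(sample_rate);
    return seconds_per_buffer / buffer_duration;
}

// Times 'compute' (a stand-in for the DSP compute call) over 'iterations'
// buffers and returns the mean number of seconds per buffer.
inline double meanSecondsPerBuffer(const std::function<void()>& compute, int iterations) {
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; i++) compute();
    std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - start;
    return elapsed.count() / iterations;
}
```

For example, spending 1 ms on a 512-frame buffer at 48 kHz (a buffer lasting about 10.67 ms) gives a load of 0.09375, that is about 9.4 % of the available time.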

Defined in the faust/dsp/dsp-optimizer.h file, the dsp_optimizer class uses the libfaust library and its LLVM backend to dynamically compile DSP objects produced with different Faust compiler options, and then measure their DSP CPU. Here is a C++ code example of its use:

static void dynamic_bench(const string& in_filename)
{
    // Init the DSP optimizer with the in_filename to compile
    dsp_optimizer optimizer(in_filename, 0, nullptr, "", 1024);

    // Discover the best set of parameters
    tuple<double, double, double, TOption> res = optimizer.findOptimizedParameters();
    cout << "Best value for '" << in_filename << "' is : "
         << get<0>(res) << " MBytes/sec with ";
    for (size_t i = 0; i < get<3>(res).size(); i++) {
        cout << get<3>(res)[i] << " ";
    }
    cout << endl;
}

This class can typically be used in tools that help developers discover the best Faust compilation parameters for a given DSP program, like the faustbench and faustbench-llvm tools.

The Proxy DSP Class

In some cases, a DSP may run outside of the application or plugin context, for instance on another machine. The proxy_dsp class can be used to create a proxy DSP that is eventually connected to the real one (using an OSC or HTTP based machinery, for instance) and mirrors its behaviour. It uses the previously described JSONUIDecoder class. The proxy_dsp can then be used in place of the real DSP, and connected to UI controllers through the standard buildUserInterface method to control it.

The faust-osc-controller tool demonstrates this capability using an OSC connection between the real DSP and its proxy. The proxy_osc_dsp class implements a specialized proxy_dsp using the liblo OSC library to connect to an OSC-controllable DSP (one that uses the OSCUI class and runs in another context or on another machine). The faust-osc-controller program then creates a real GUI (using GTKUI in this example) that controls the remote DSP and reflects its dynamic state (like vumeter values coming back from the real DSP).

Embedded Platforms

In recent years, Faust has been targeting an increasing number of embedded platforms for real-time audio signal processing applications. It can now be used to program microcontrollers (e.g., ESP32, Teensy, Pico DSP and Daisy), mobile platforms, embedded Linux systems (e.g., Bela and Elk), Digital Signal Processors (DSPs), and more. Specialized architecture files and faust2xx scripts have been developed for these targets.

Metadata Naming Convention

A specific question arises when dealing with devices that have a limited screen (or none at all) to display a GUI, but a set of physical knobs or buttons to be connected to control parameters. The standard way is then to use metadata in control labels. Since being able to use the same DSP file on all devices is always desirable, a common set of metadata has been defined:

- [switch:N] is used to connect to switch buttons

- [knob:N] is used to connect to knobs

An extended set of metadata will probably have to be progressively defined and standardized.
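One way an architecture file might honour these conventions is sketched below. In Faust's UI interface, label metadata typically reaches the architecture through declare(zone, key, value) calls made before the corresponding widget is added, so a label like "Cutoff[knob:1]" would arrive as key "knob" and value "1". HardwareBinder and its setRange, onKnob and onSwitch methods are hypothetical names, and the struct is kept standalone rather than deriving from UI so that it compiles without Faust headers.

```cpp
#include <cstdlib>
#include <cstring>
#include <map>

// Hypothetical binder mapping [knob:N] / [switch:N] metadata to hardware
// controls. declare() mirrors the (zone, key, value) shape of Faust's UI callback.
struct HardwareBinder {
    struct Binding { float* zone; float min; float max; };
    std::map<int, Binding> knobs;     // knob index -> parameter
    std::map<int, Binding> switches;  // switch index -> parameter

    void declare(float* zone, const char* key, const char* value) {
        if (std::strcmp(key, "knob") == 0) {
            knobs[std::atoi(value)] = Binding{zone, 0.f, 1.f};
        } else if (std::strcmp(key, "switch") == 0) {
            switches[std::atoi(value)] = Binding{zone, 0.f, 1.f};
        }
    }
    // Record the range once the widget itself is added (knobs only here).
    void setRange(float* zone, float min, float max) {
        for (auto& kv : knobs) {
            if (kv.second.zone == zone) { kv.second.min = min; kv.second.max = max; }
        }
    }
    // Map a normalized hardware reading in [0, 1] onto the parameter range.
    void onKnob(int index, float normalized) {
        auto it = knobs.find(index);
        if (it == knobs.end()) return;
        const Binding& b = it->second;
        *b.zone = b.min + normalized * (b.max - b.min);
    }
    // Switches simply write 1 when pressed and 0 when released.
    void onSwitch(int index, bool pressed) {
        auto it = switches.find(index);
        if (it != switches.end()) *it->second.zone = pressed ? 1.f : 0.f;
    }
};
```

With a knob declared on a parameter ranging from 100 to 1100, a hardware reading of 0.5 sets the parameter to 600.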

Using the -uim Compiler Option

On embedded platforms with limited capabilities, using the -uim option can be helpful. The generated C/C++ code then contains a static description of several characteristics of the DSP, like the number of audio inputs/outputs, the number of control inputs/outputs, and macros fed with the control parameters (label, DSP field name, init, min, max, step) that can be implemented in the architecture file for various needs.

For example the following DSP program:

process = _*hslider("Gain", 0, 0, 1, 0.01) : hbargraph("Vol", 0, 1);

compiled with faust -uim foo.dsp gives this additional section:

#ifdef FAUST_UIMACROS
#define FAUST_FILE_NAME "foo.dsp"
#define FAUST_CLASS_NAME "mydsp"
#define FAUST_INPUTS 1
#define FAUST_OUTPUTS 1
#define FAUST_ACTIVES 1
#define FAUST_PASSIVES 1
FAUST_ADDHORIZONTALSLIDER("Gain", fHslider0, 0.0f, 0.0f, 1.0f, 0.01f);
FAUST_ADDHORIZONTALBARGRAPH("Vol", fHbargraph0, 0.0f, 1.0f);
#define FAUST_LIST_ACTIVES(p) \
    p(HORIZONTALSLIDER, Gain, "Gain", fHslider0, 0.0f, 0.0f, 1.0f, 0.01f) \

#define FAUST_LIST_PASSIVES(p) \
    p(HORIZONTALBARGRAPH, Vol, "Vol", fHbargraph0, 0.0, 0.0f, 1.0f, 0.0) \

#endif

The FAUST_ADDHORIZONTALSLIDER and FAUST_ADDHORIZONTALBARGRAPH macros can then be implemented to do whatever is needed with the "Gain", fHslider0, 0.0f, 0.0f, 1.0f, 0.01f and "Vol", fHbargraph0, 0.0f, 1.0f parameters respectively.

The more sophisticated FAUST_LIST_ACTIVES and FAUST_LIST_PASSIVES macros can be used to apply any p function or macro (defined elsewhere in the architecture file) to each item. The minimal-static.cpp file demonstrates this feature.
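As a sketch of this mechanism, the block below supplies its own p macro (the hypothetical MAKE_PARAM) that turns each active control into an entry of a static descriptor table at compile time. The FAUST_LIST_ACTIVES definition is copied from the -uim output above so the example stands alone; ParamInfo, MAKE_PARAM and kActives are illustrative names, not part of the generated code.

```cpp
// Copied from the -uim output above (normally provided by the generated code).
#define FAUST_LIST_ACTIVES(p) \
    p(HORIZONTALSLIDER, Gain, "Gain", fHslider0, 0.0f, 0.0f, 1.0f, 0.01f)

// Hypothetical descriptor filled from the macro list.
struct ParamInfo {
    const char* type;   // widget type, stringified
    const char* label;  // control label
    const char* field;  // DSP field name, stringified
    float init, min, max, step;
};

// The 'p' callback: stringify the type and field name, keep label and range.
#define MAKE_PARAM(type, ident, label, field, init, min, max, step) \
    ParamInfo{#type, label, #field, init, min, max, step},

// One table entry per active control, built entirely by the preprocessor.
static const ParamInfo kActives[] = { FAUST_LIST_ACTIVES(MAKE_PARAM) };
static const int kNumActives = sizeof(kActives) / sizeof(kActives[0]);
```

The same table could then drive a hardware mapping or a parameter list without any UI class being instantiated at runtime.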

Developing a New Architecture File

Developing a new architecture file typically means writing a generic file that will be populated with the actual output of the Faust compiler, in order to produce a complete file, ready to be compiled as a standalone application or plugin.

The architecture to be used is specified at compile time with the -a option. It must contain the <<includeIntrinsic>> and <<includeclass>> lines that will be recognized by the Faust compiler, and replaced by the generated code. Here is an example in C++, but the same logic can be used with other languages producing textual outputs, like C, Cmajor, Rust or Dlang.

Look at the minimal.cpp example located in the architecture folder:

#include <iostream>
#include "faust/gui/PrintUI.h"
#include "faust/gui/meta.h"
#include "faust/audio/dummy-audio.h"
#include "faust/dsp/one-sample-dsp.h"

// To be replaced by the compiler generated C++ class
<<includeIntrinsic>>

<<includeclass>>

int main(int argc, char* argv[])
{
    mydsp DSP;
    std::cout << "DSP size: " << sizeof(DSP) << " bytes\n";

    // Activate the UI, here one that only prints the control paths
    PrintUI ui;
    DSP.buildUserInterface(&ui);

    // Allocate the audio driver to render 5 buffers of 512 frames
    dummyaudio audio(5);
    audio.init("Test", static_cast<dsp*>(&DSP));

    // Render buffers...
    audio.start();
    audio.stop();
}
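To illustrate what happens to the two placeholder lines, here is a minimal sketch of the substitution (not the Faust compiler's actual implementation): the architecture file is copied line by line, and each line consisting solely of a placeholder is replaced by the corresponding generated code. expandArchitecture is a hypothetical name, and it assumes each placeholder sits alone on its line, as in minimal.cpp. With an architecture made only of the two placeholders, the output is just the generated code, which matches the nullarch.cpp behaviour described at the start of this chapter.

```cpp
#include <sstream>
#include <string>

// Copies 'arch' line by line, replacing each placeholder line with the given code.
inline std::string expandArchitecture(const std::string& arch,
                                      const std::string& intrinsicCode,
                                      const std::string& classCode) {
    std::istringstream in(arch);
    std::ostringstream out;
    std::string line;
    while (std::getline(in, line)) {
        if (line == "<<includeIntrinsic>>") {
            out << intrinsicCode;       // currently unused, often empty
        } else if (line == "<<includeclass>>") {
            out << classCode;           // the generated mydsp class
        } else {
            out << line << '\n';        // architecture code kept verbatim
        }
    }
    return out.str();
}
```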

Calling faust -a minimal.cpp noise.dsp -o noise.cpp will produce a ready-to-compile noise.cpp file:

/* ------------------------------------------------------------

name: "noise"

Code generated with Faust 2.28.0 (https://faust.grame.fr)

Compilation options: -lang cpp -scal -ftz 0